VALSE2020 Annual Progress Review of Key Topics (APR)

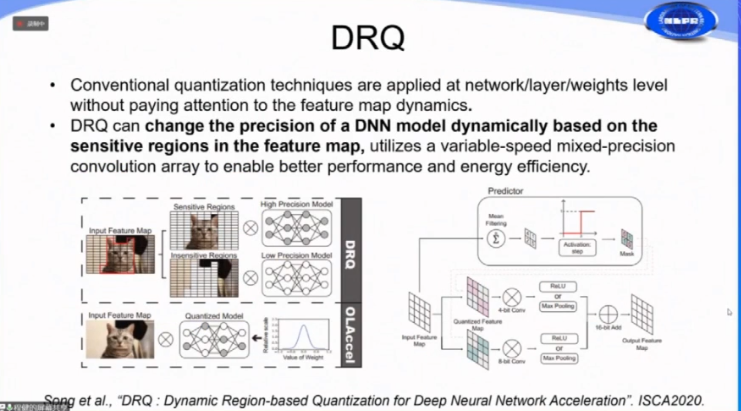

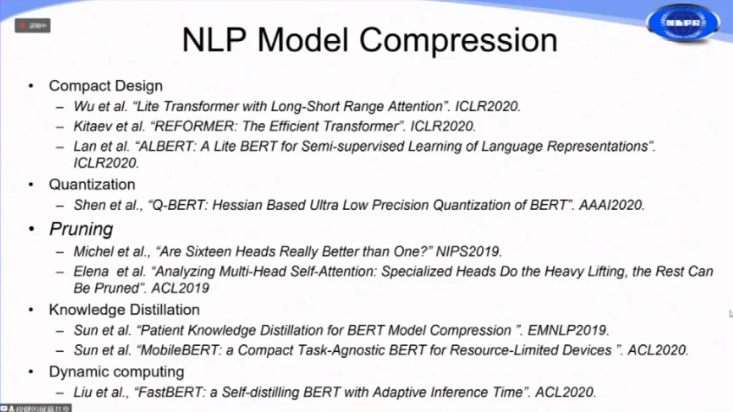

Model Compression

- Rethinking Network Pruning

- Lottery Ticket Hypothesis

- Rethinking the Value of Network Pruning

- Pruning is an architecture search paradigm

- Pruning from Scratch (AAAI2020)

- Data-free Compression

- Data-free Learning of Student Networks (ICCV2019)

- Uses a GAN to generate the training samples

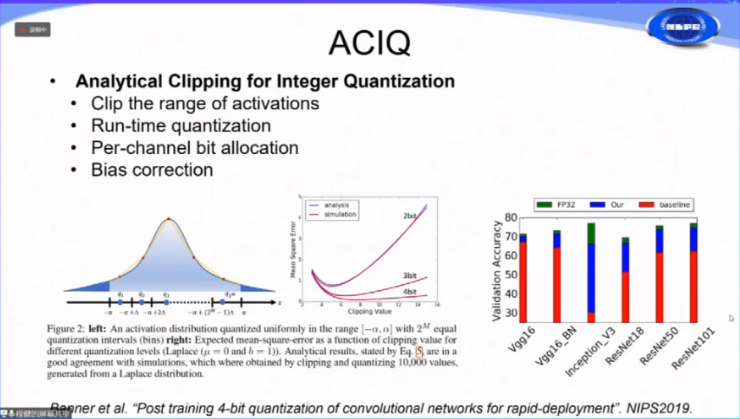

- Post training 4-bit quantization of convolutional networks for rapid-deployment (NeurIPS2019)

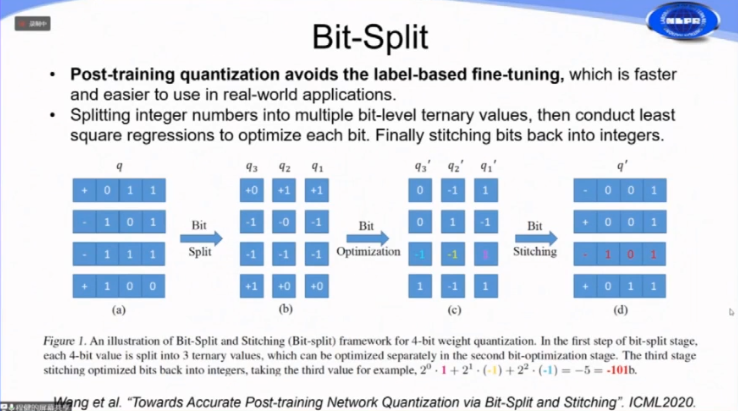

- Towards accurate post-training network quantization via bit-split and stitching (ICML2020)

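As a rough illustration of what post-training quantization means (weights are quantized after training, with no retraining), the sketch below applies symmetric uniform 4-bit quantization to synthetic Gaussian "weights" and compares plain min-max scaling against a clipping threshold picked by grid search. The grid search is a simple stand-in assumption; it is not the analytical clipping, per-channel bit allocation, or bit-split method of the papers listed above, and the helper names are hypothetical.

```python
import random
from typing import List


def quantize_dequantize(x: List[float], clip: float, num_bits: int = 4) -> List[float]:
    """Symmetric uniform quantization (hypothetical helper).

    Values are clamped to [-clip, clip], mapped to integer codes in
    [-levels, levels] with levels = 2**(num_bits - 1) - 1 (7 for 4 bits),
    then mapped back to floats so the quantization error can be measured.
    """
    levels = 2 ** (num_bits - 1) - 1
    scale = clip / levels
    out = []
    for v in x:
        clamped = max(-clip, min(clip, v))
        q = round(clamped / scale)  # nearest integer code (ties round to even)
        out.append(q * scale)
    return out


def mse(a: List[float], b: List[float]) -> float:
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(5_000)]  # synthetic, not real weights

# Baseline: scale so that the largest |weight| maps to the top code.
max_abs = max(abs(w) for w in weights)
naive_mse = mse(weights, quantize_dequantize(weights, max_abs))

# Post-training clipping: search thresholds on the fixed weights, no retraining.
candidates = [max_abs * k / 100 for k in range(10, 100)] + [max_abs]
best_clip = min(candidates, key=lambda c: mse(weights, quantize_dequantize(weights, c)))
best_mse = mse(weights, quantize_dequantize(weights, best_clip))

print(f"min-max:  clip={max_abs:.2f}, mse={naive_mse:.4f}")
print(f"searched: clip={best_clip:.2f}, mse={best_mse:.4f}")
```

With only 15 levels available, clipping the rare large-magnitude values shrinks the step size for everything else, which is why a threshold below the maximum typically gives a lower error at 4 bits.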

- NAS for compact networks

- NLP compression

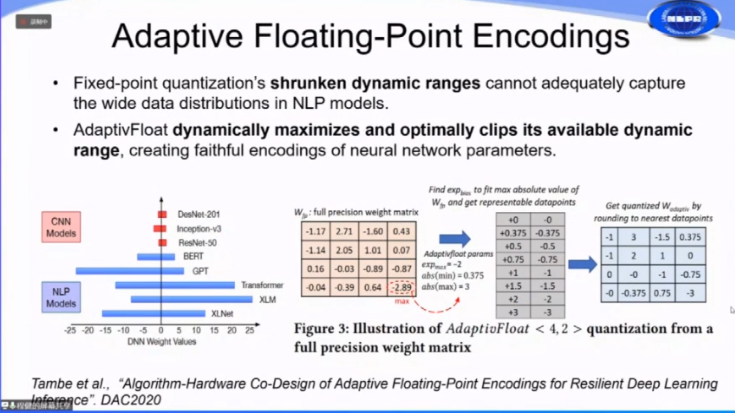

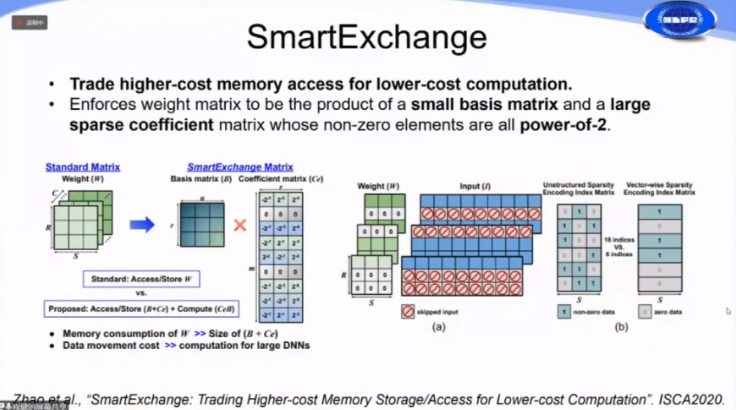

- Hardware-software Co-design

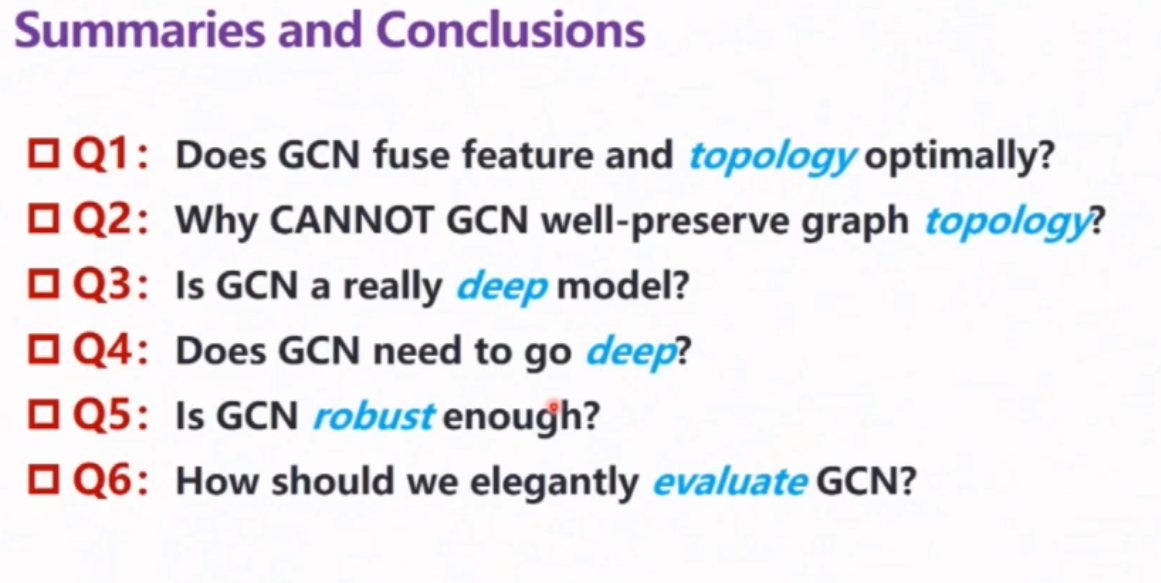

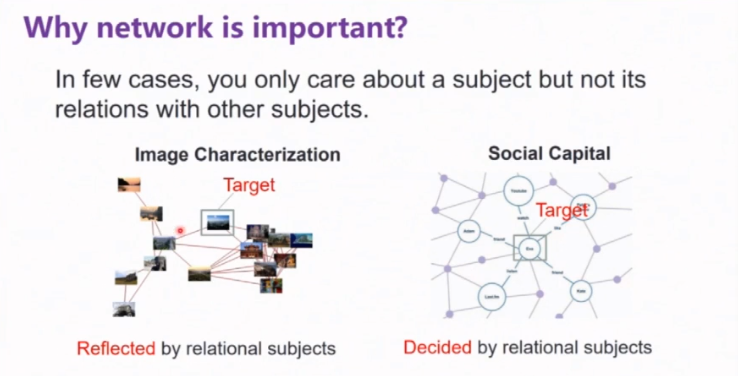

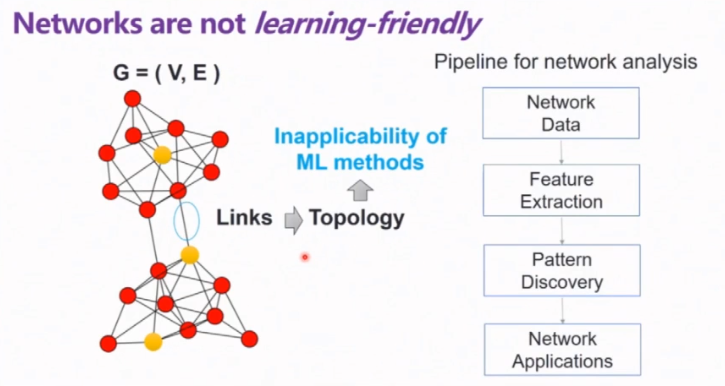

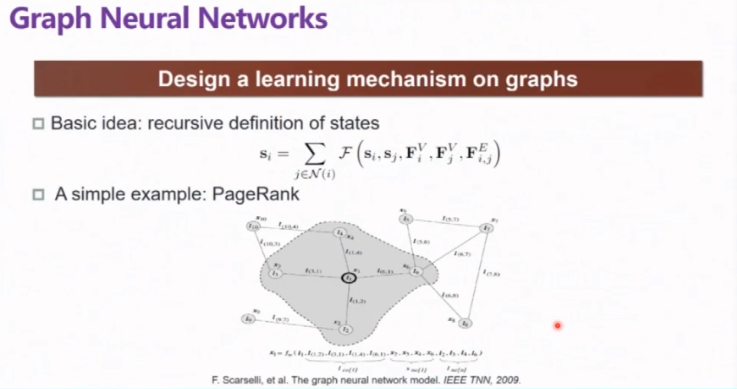

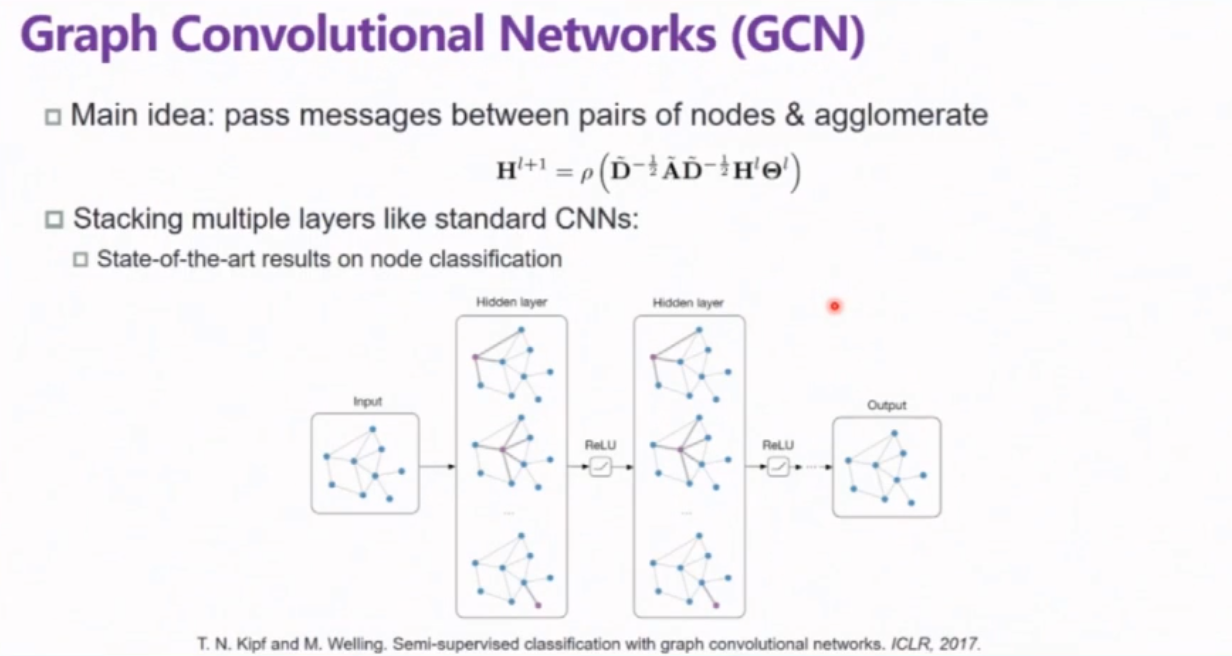

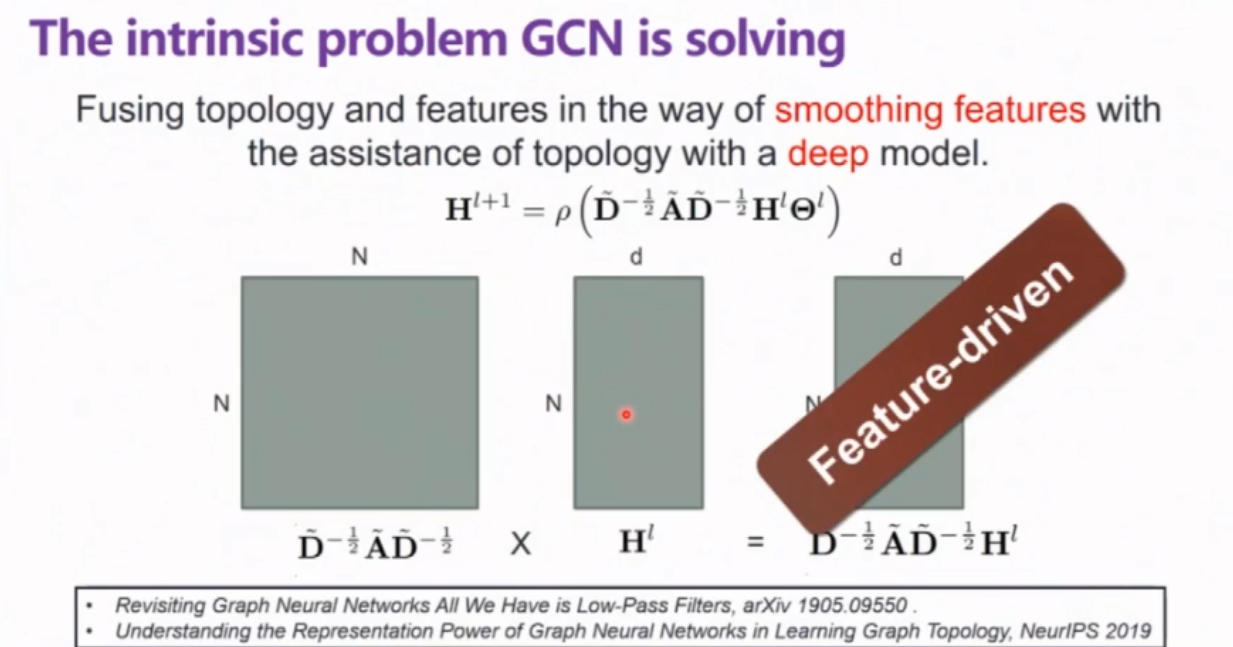

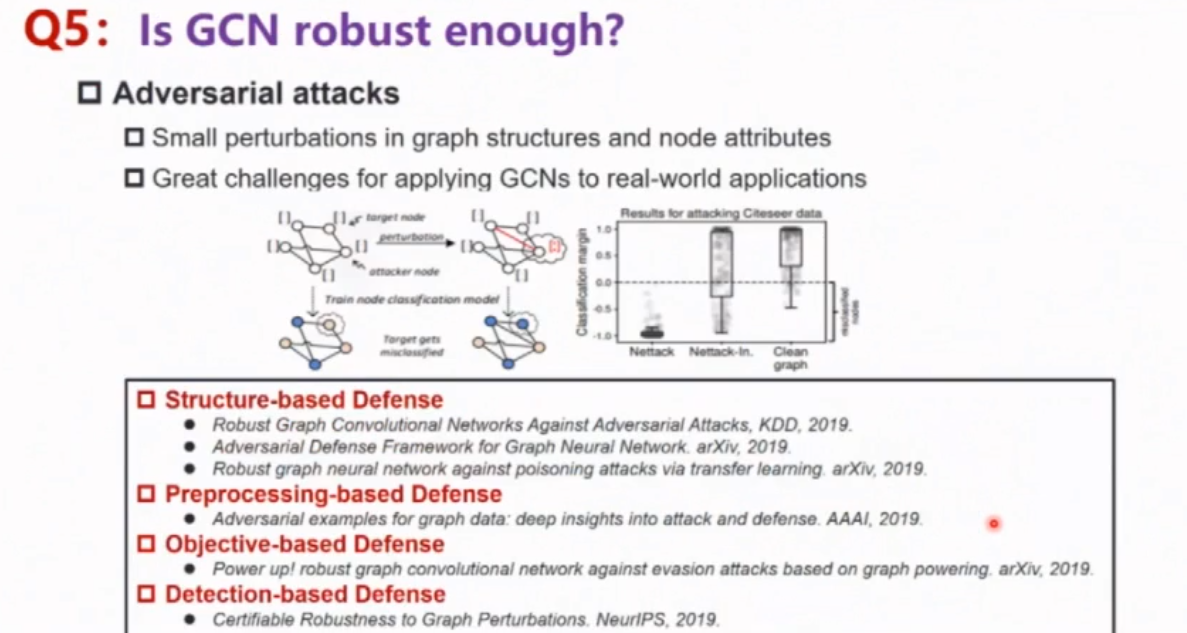

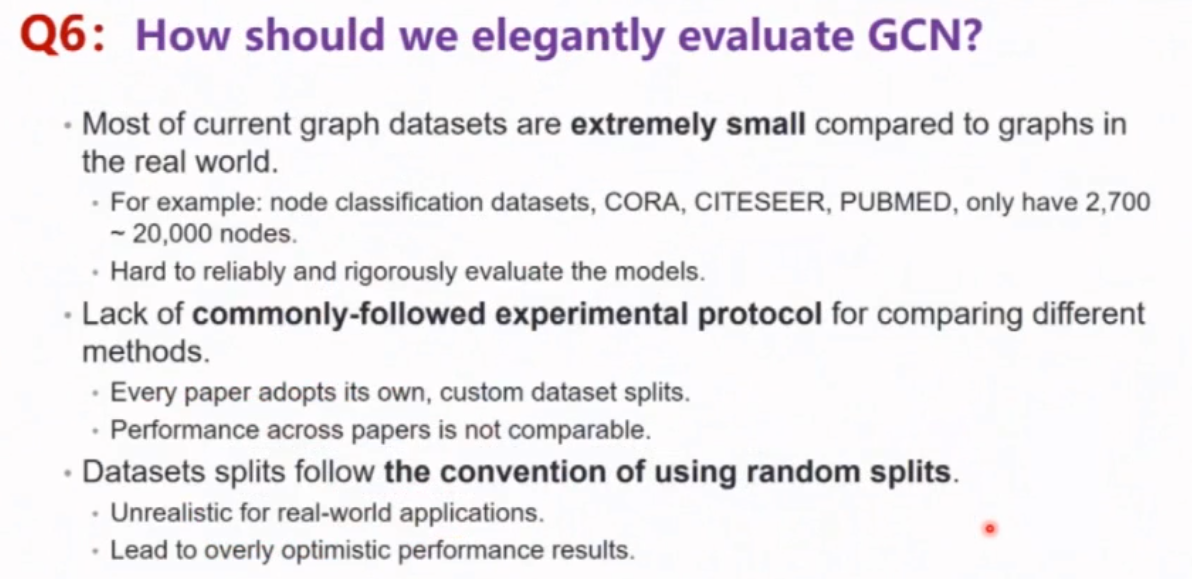

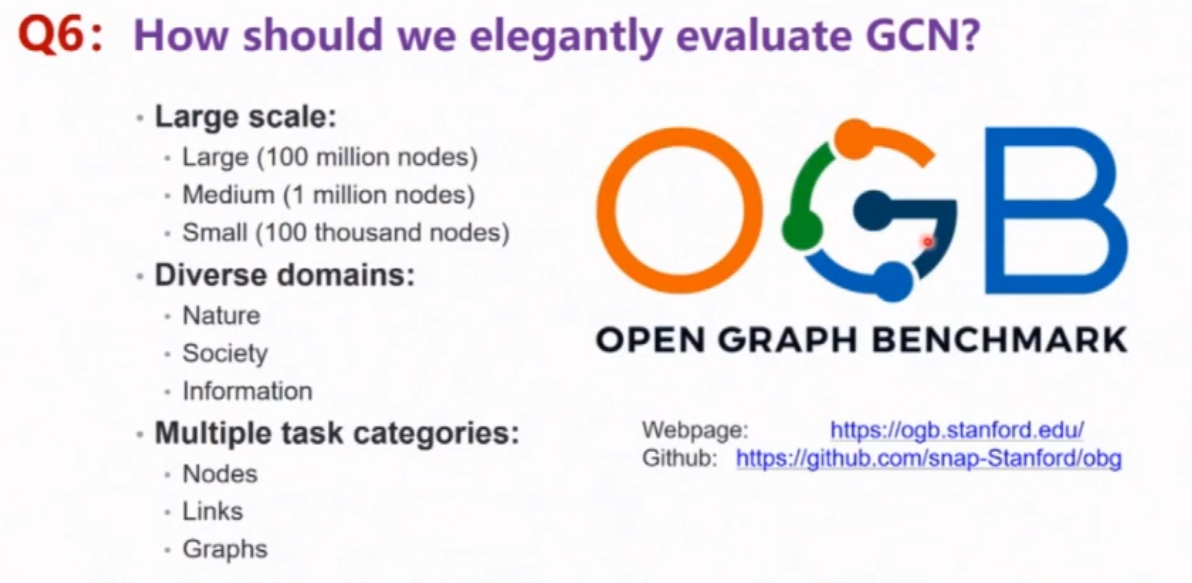

Graph Neural Networks

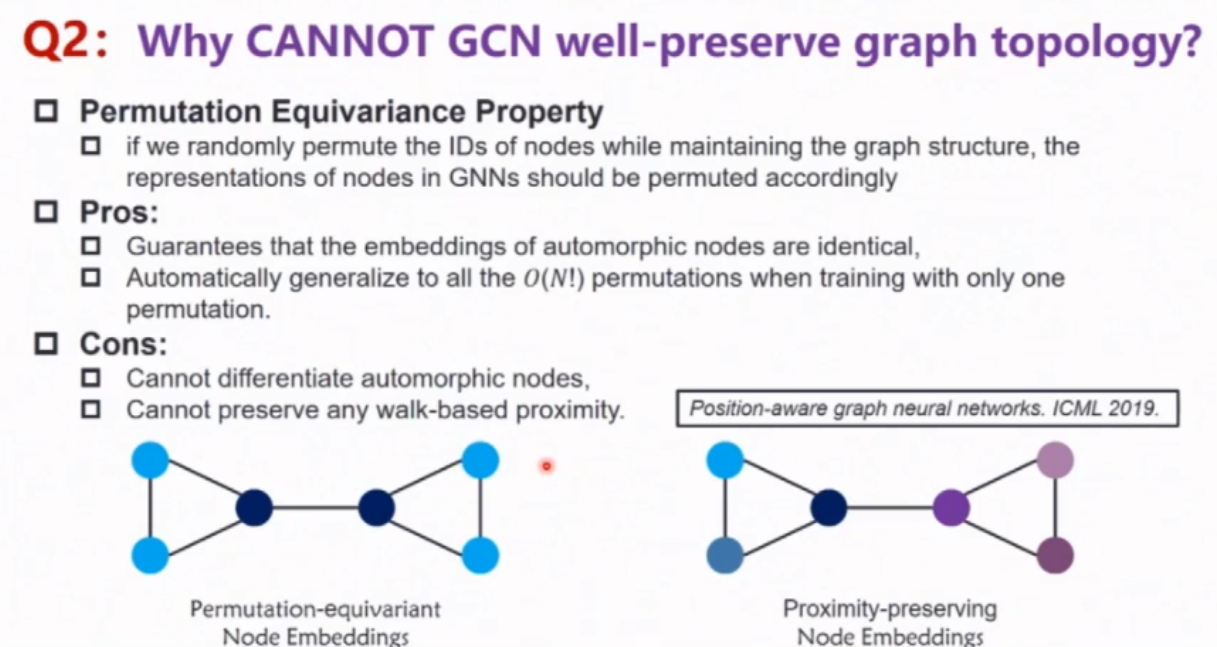

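GNNs are permutation invariant. The upside is that two isomorphic nodes receive the same topology representation. The downside is that if two isomorphic nodes carry different labels, telling them apart is beyond the expressive power of a GNN.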
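The property above can be checked on a toy graph. The sketch below assumes a minimal, weight-free sum-aggregation layer in pure Python (`gnn_layer` and `run_gnn` are hypothetical illustrations, not taken from the slides). On a featureless path graph the mirror-image nodes end up with identical representations, and relabelling the nodes only permutes the outputs.

```python
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours


def gnn_layer(graph: Graph, h: Dict[int, float]) -> Dict[int, float]:
    """One sum-aggregation message-passing step: h'_v = h_v + sum_{u in N(v)} h_u.

    The update only uses neighbour features, never node IDs, so relabelling
    the nodes just relabels the outputs.
    """
    return {v: h[v] + sum(h[u] for u in graph[v]) for v in graph}


def run_gnn(graph: Graph, num_layers: int = 3) -> Dict[int, float]:
    # Featureless graph: every node starts with the same scalar feature 1.0.
    h = {v: 1.0 for v in graph}
    for _ in range(num_layers):
        h = gnn_layer(graph, h)
    return h


# Path graph 0 - 1 - 2 - 3: nodes 0 and 3 are isomorphic (mirror images),
# as are nodes 1 and 2.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
h = run_gnn(path)
print(h[0] == h[3], h[1] == h[2])  # True True

# Relabel the nodes with a permutation; outputs are permuted the same way.
perm = {0: 2, 1: 0, 2: 3, 3: 1}
permuted = {perm[v]: [perm[u] for u in nbrs] for v, nbrs in path.items()}
h_perm = run_gnn(permuted)
print(all(h_perm[perm[v]] == h[v] for v in path))  # True
```

Because nodes 0 and 3 (and 1 and 2) always receive identical representations when no distinguishing node features are given, no classifier on top of them could assign these nodes different labels.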

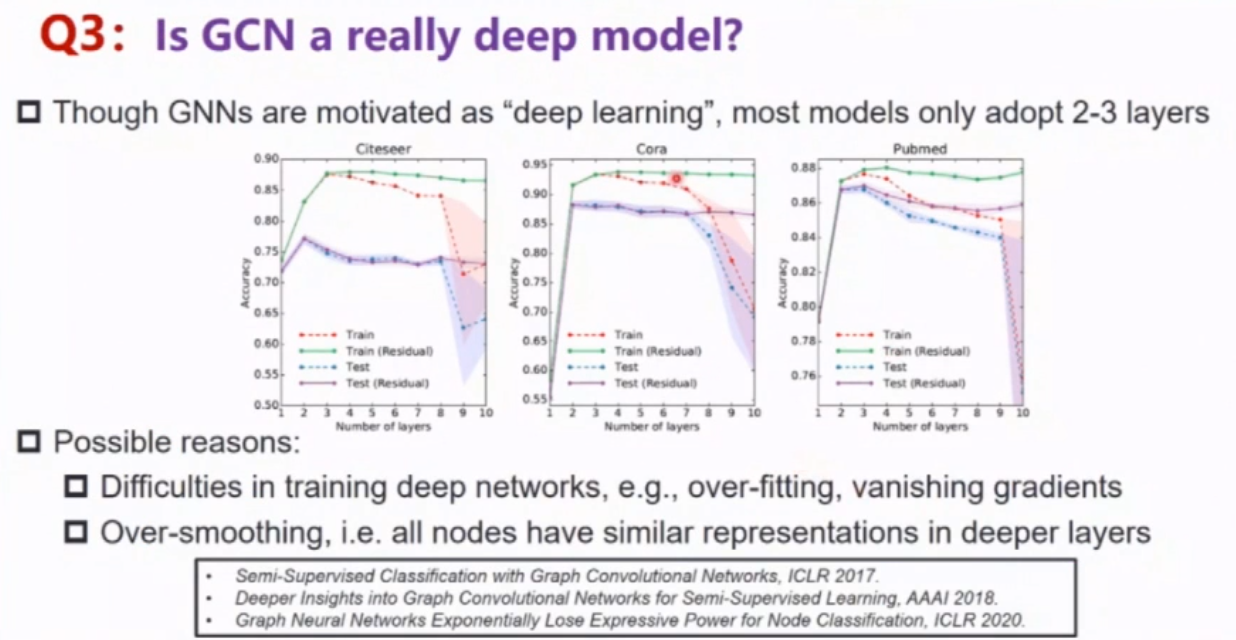

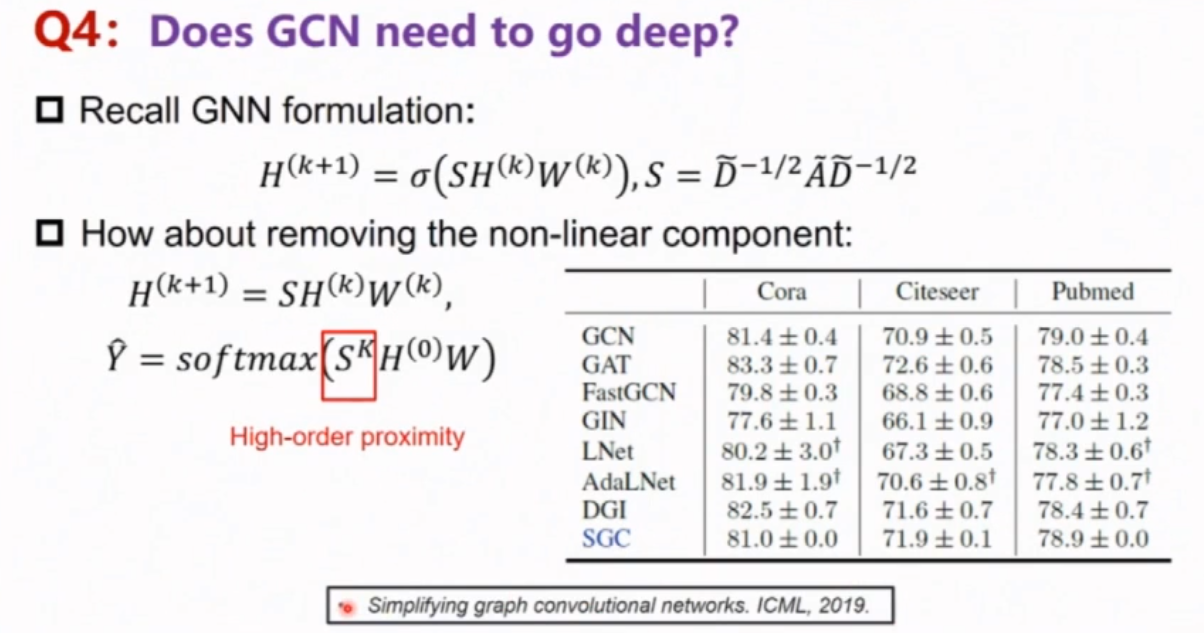

Training deep GNNs is problematic: adding layers improves accuracy on image datasets, but gives no performance boost on datasets from more general graph settings.
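One commonly cited reason for this (an assumption here, not stated in the slides) is over-smoothing: repeated neighbourhood averaging pushes node features on a connected graph toward a common value, so deeper stacks make nodes harder to distinguish. The weight-free sketch below, with hypothetical helper names, tracks the gap between the largest and smallest node feature as depth grows.

```python
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours


def mean_aggregate(graph: Graph, h: Dict[int, float]) -> Dict[int, float]:
    """Weight-free propagation: average over the node itself and its neighbours.

    Including the node itself (a self-loop) means that on a connected graph
    repeated averaging converges to a single value shared by all nodes.
    """
    return {v: (h[v] + sum(h[u] for u in graph[v])) / (1 + len(graph[v])) for v in graph}


def feature_spread(h: Dict[int, float]) -> float:
    # Max minus min node feature: 0 means all nodes are indistinguishable.
    return max(h.values()) - min(h.values())


# A small connected graph (triangle 0-1-2 with a tail 2-3-4) and distinct initial features.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4], 4: [3]}
h = {0: 1.0, 1: 0.0, 2: 0.0, 3: 0.0, 4: -1.0}

spreads = []
for depth in range(1, 33):
    h = mean_aggregate(graph, h)
    if depth in (1, 2, 4, 8, 16, 32):
        spreads.append(feature_spread(h))
        print(f"depth={depth:2d}  spread={spreads[-1]:.6f}")
```

Real GNN layers add learned weights and nonlinearities, so this only isolates the averaging part of propagation; it shows why depth alone does not guarantee better node representations.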

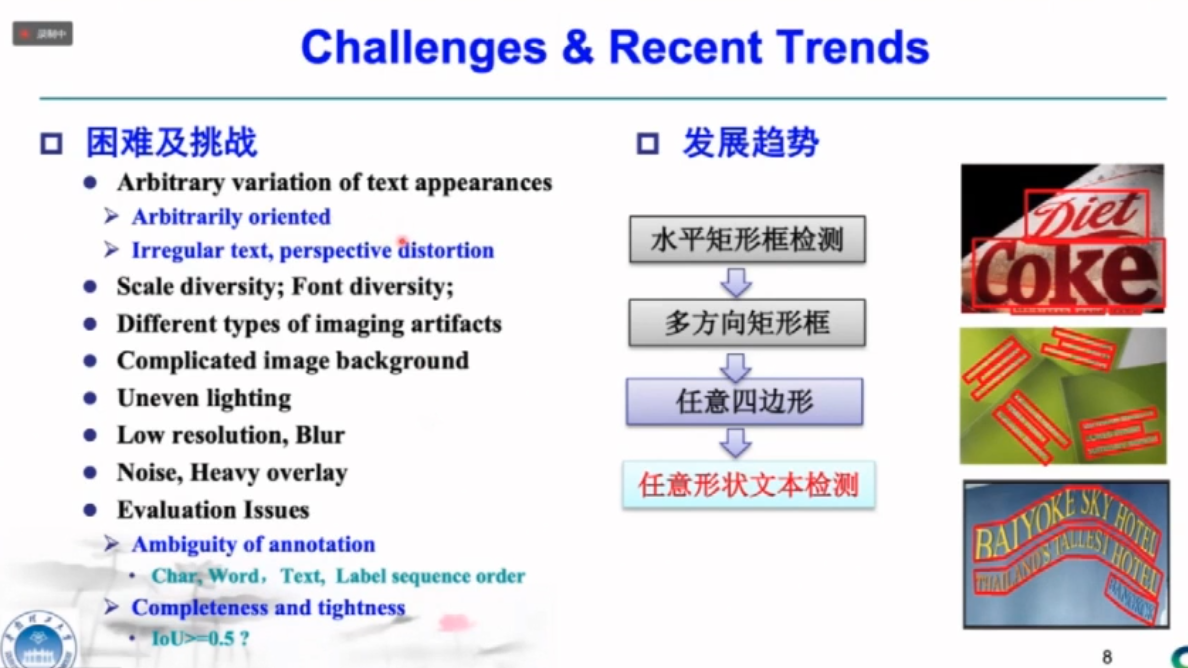

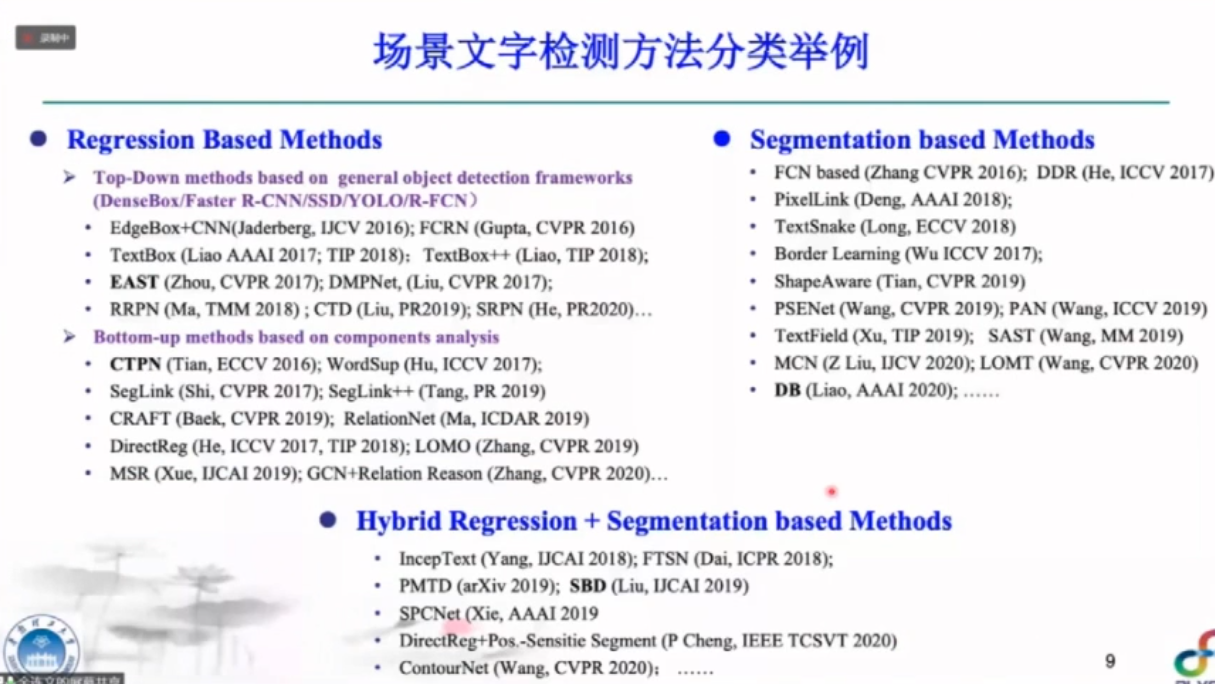

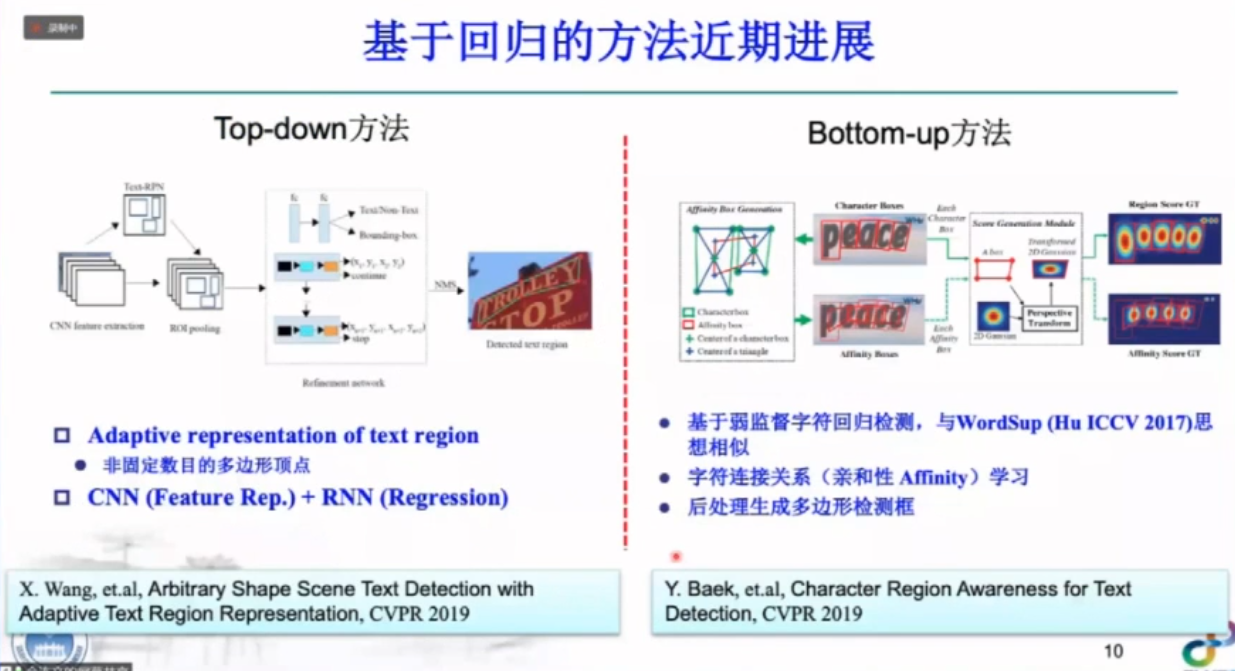

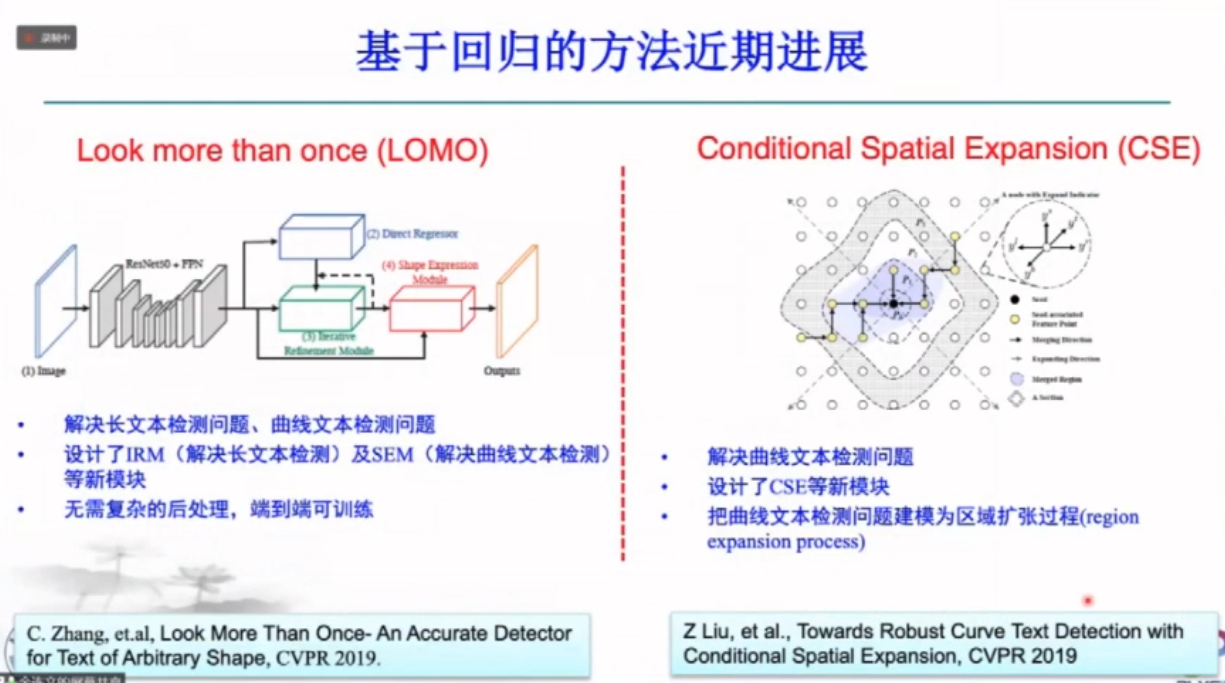

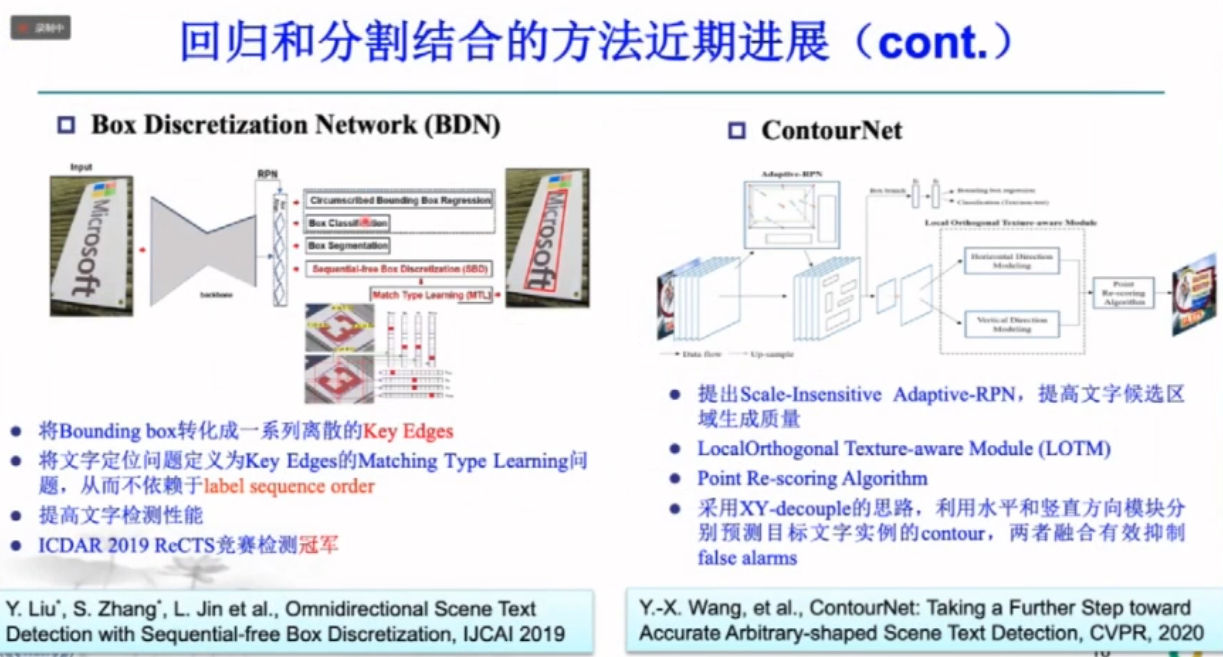

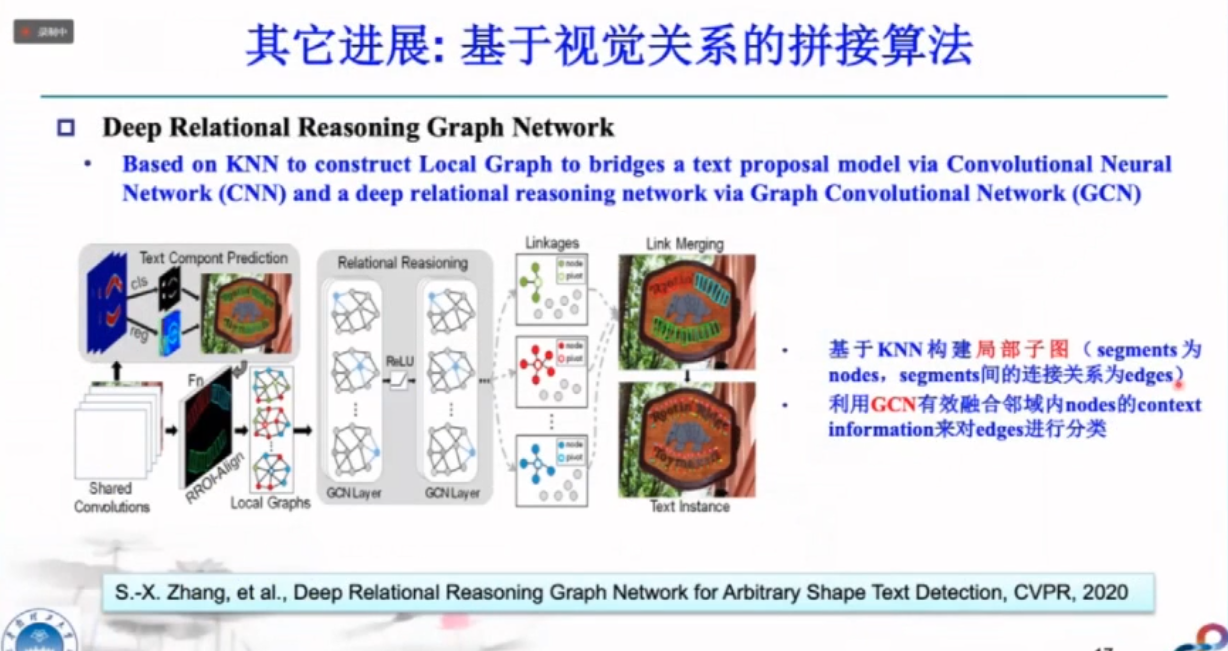

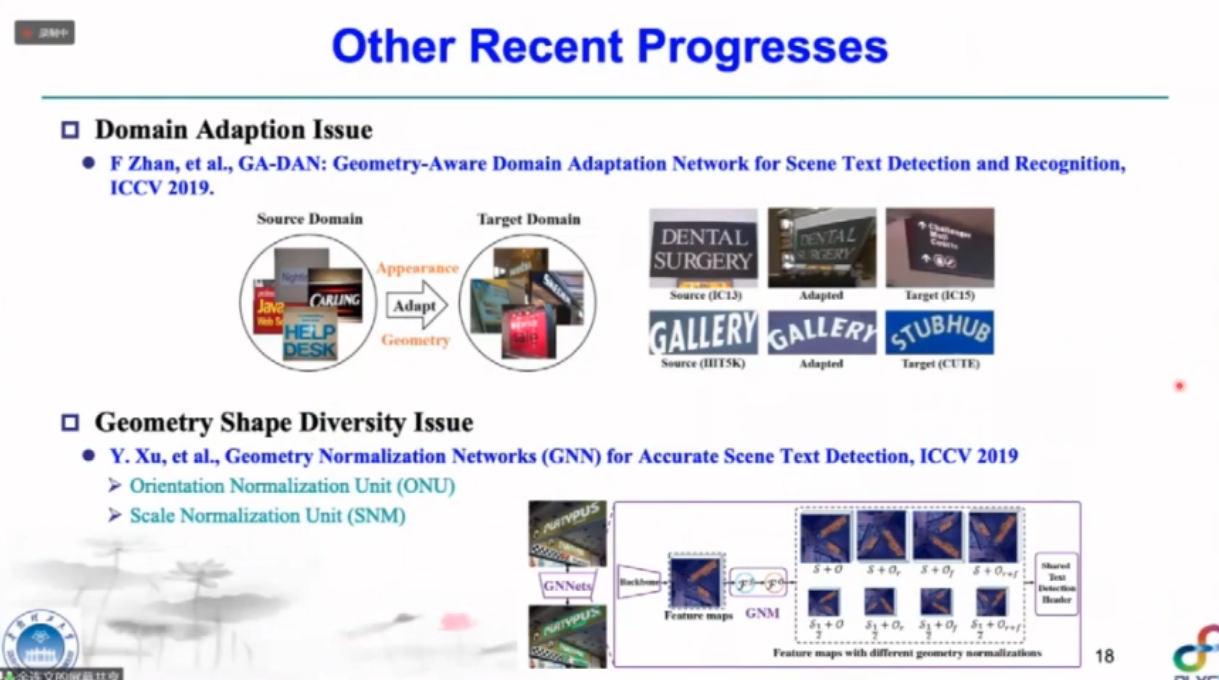

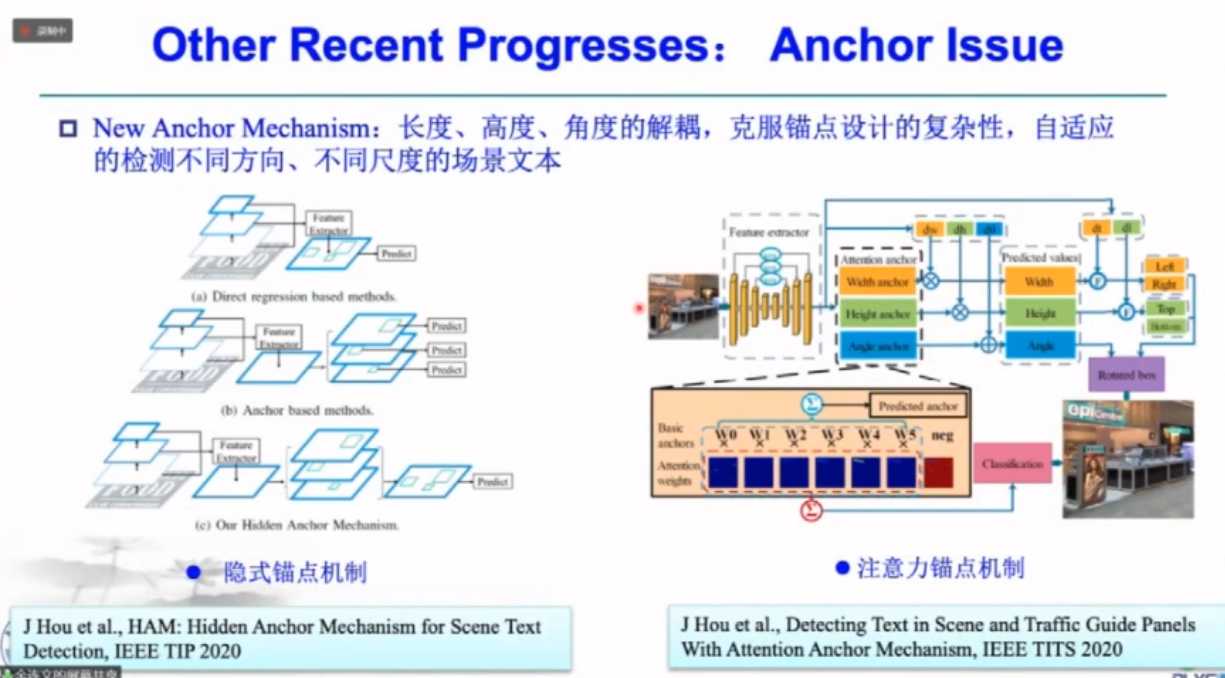

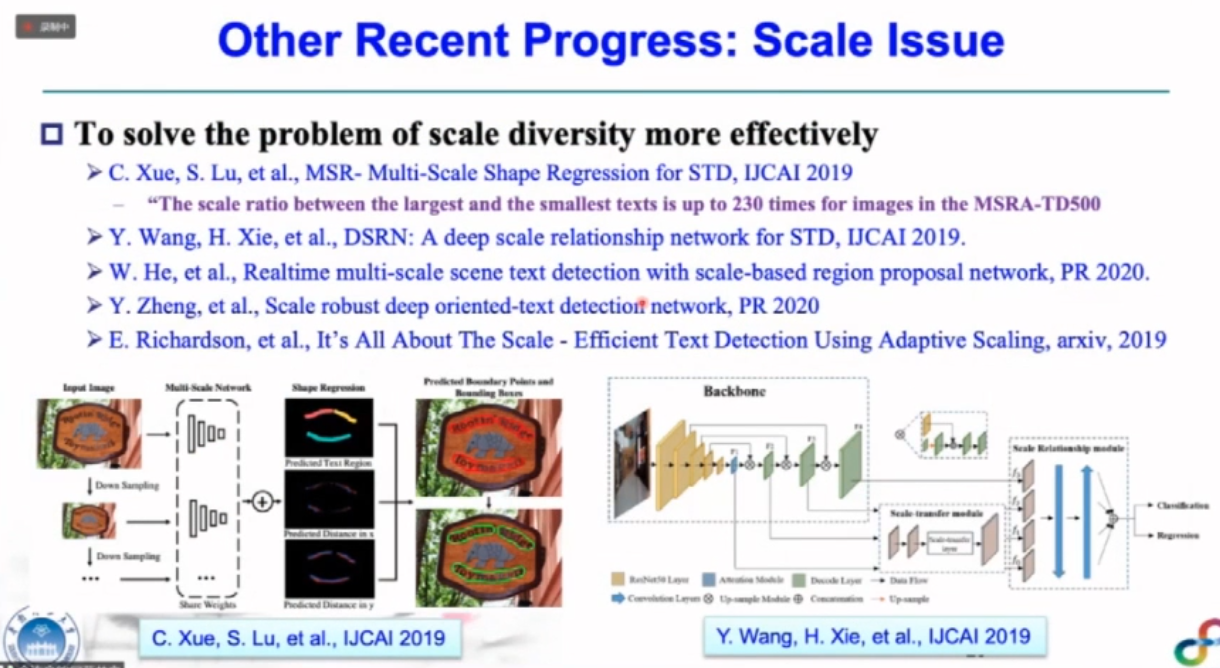

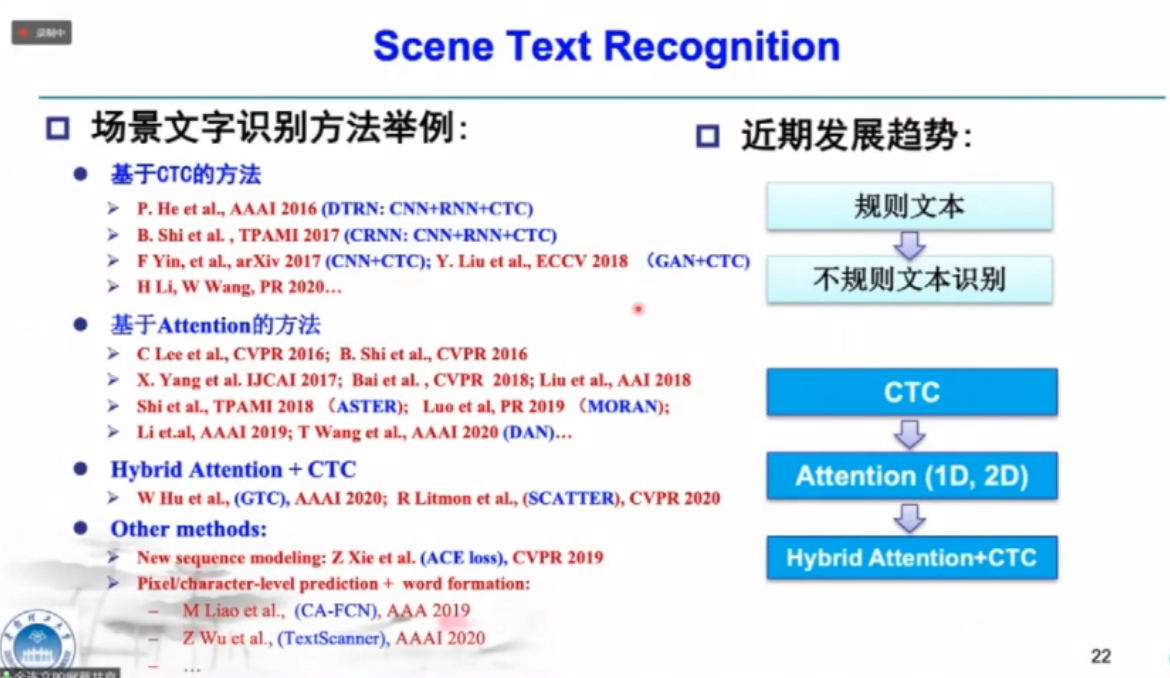

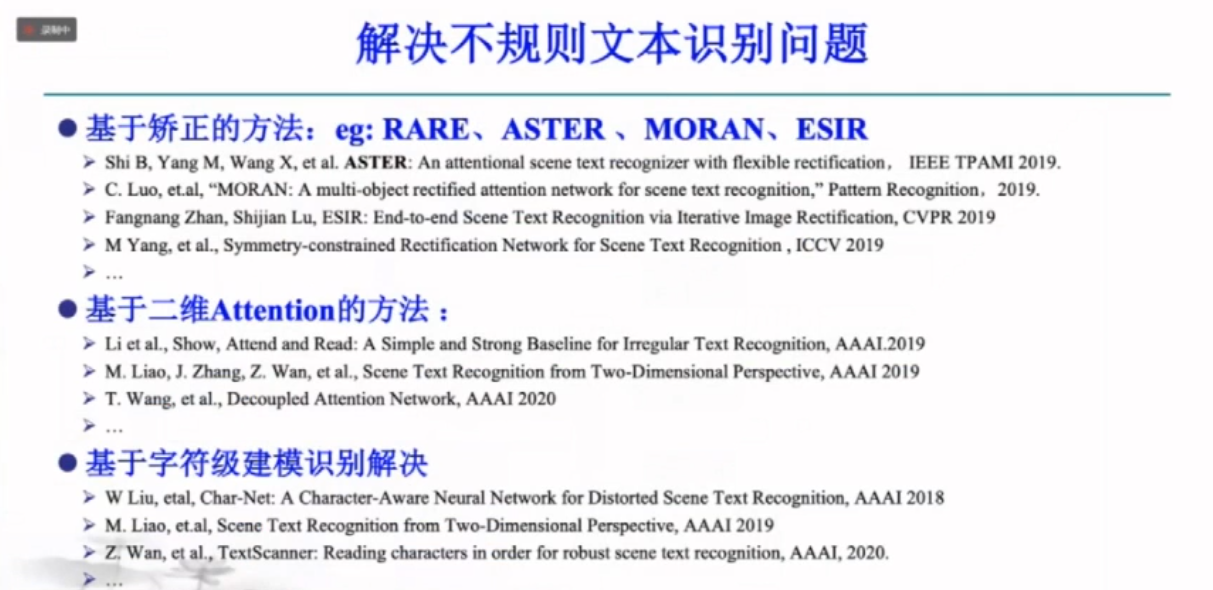

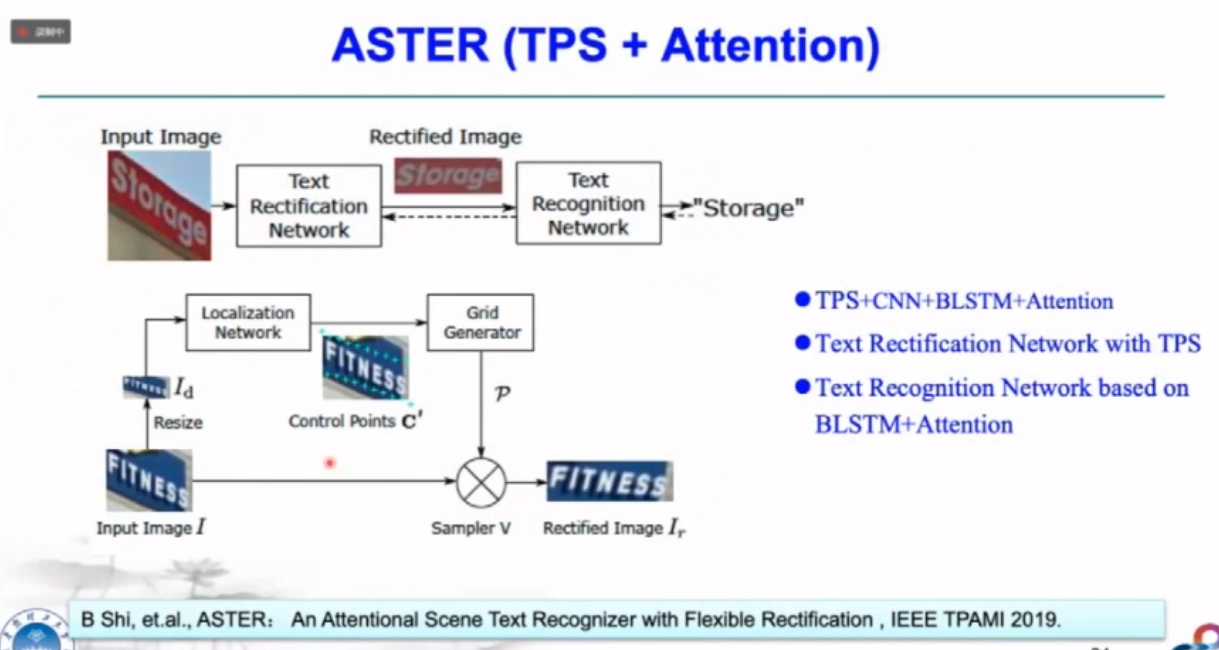

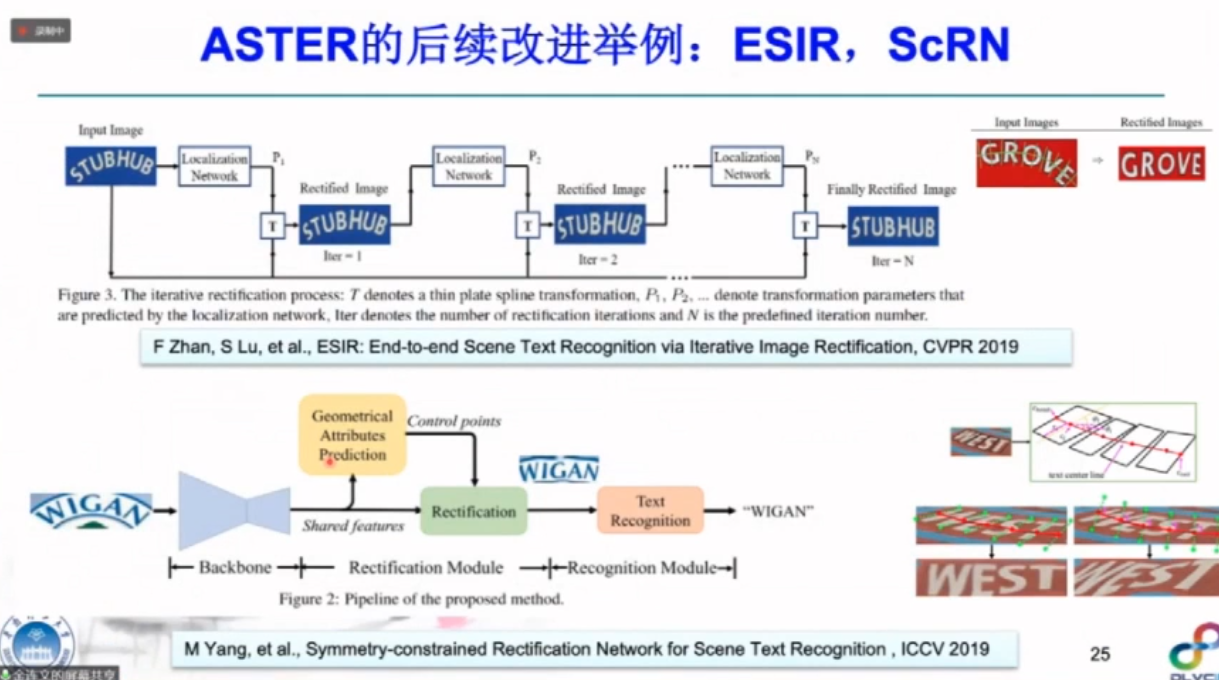

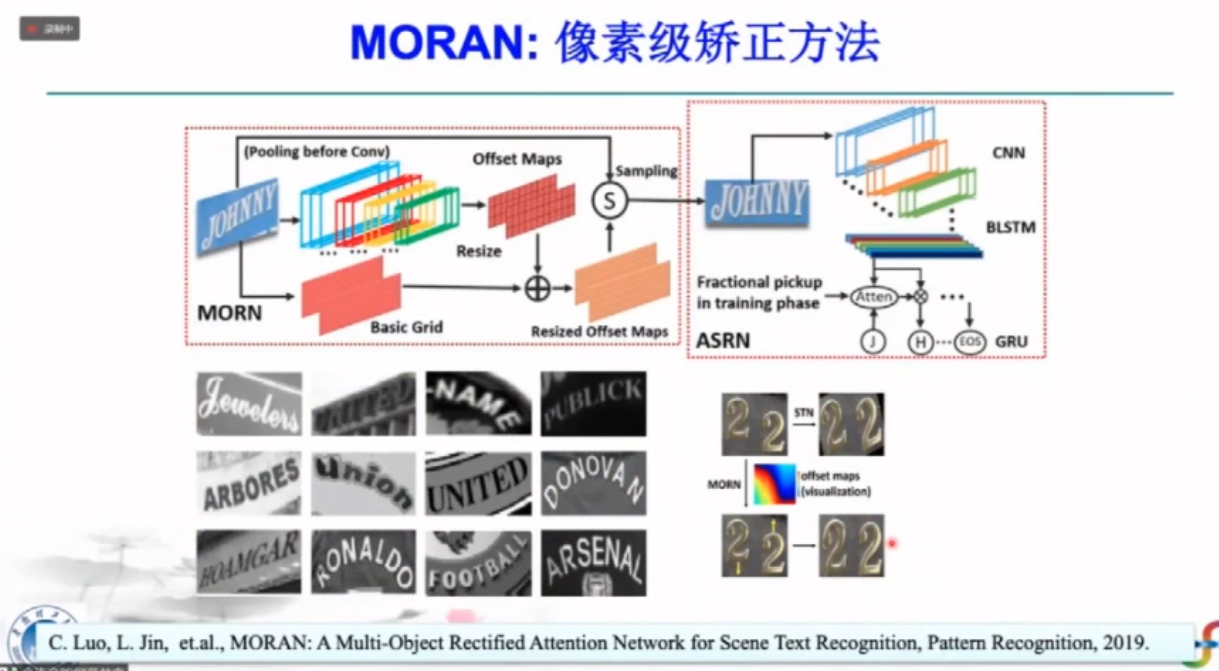

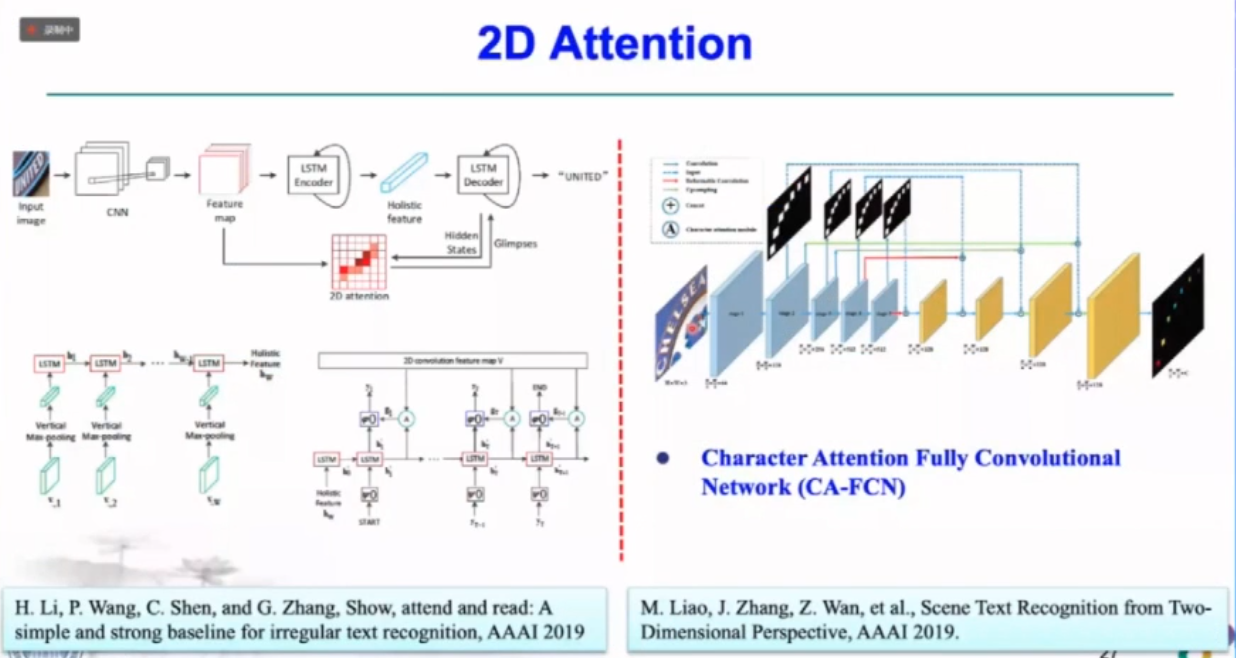

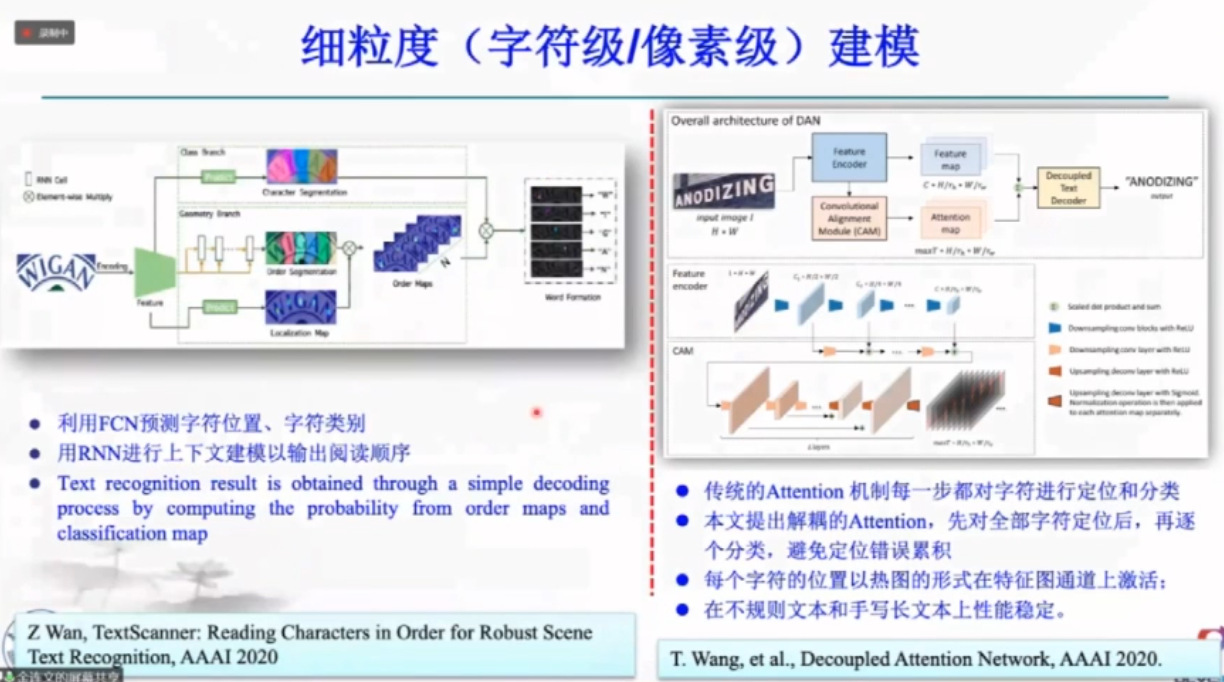

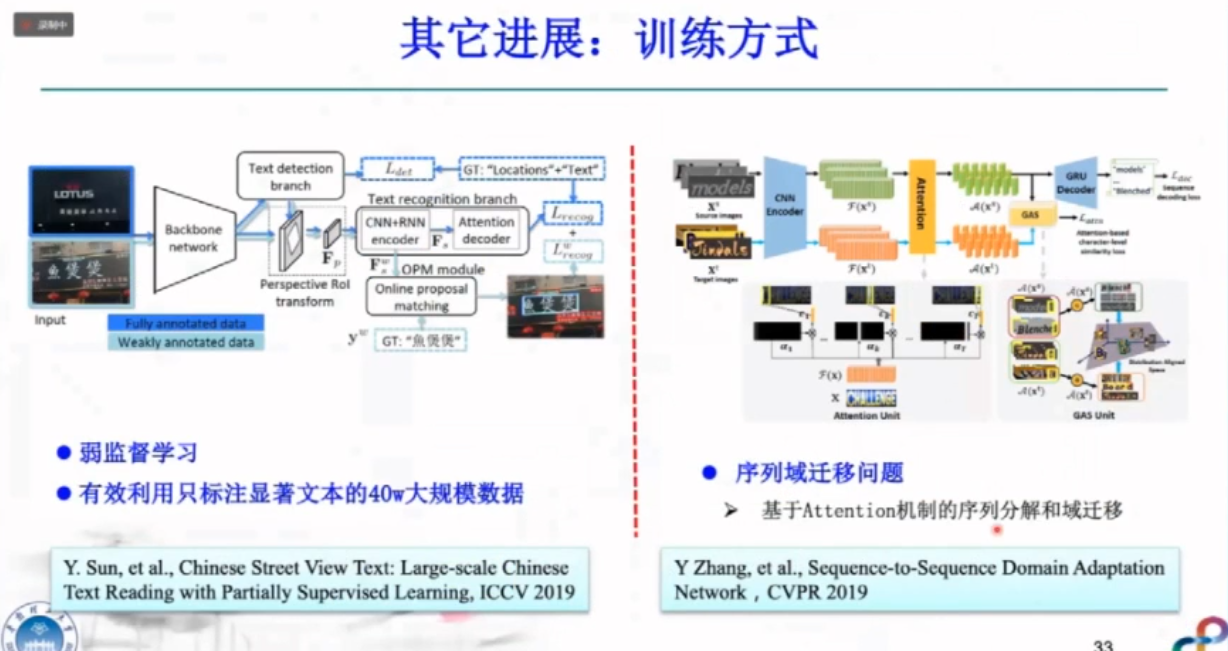

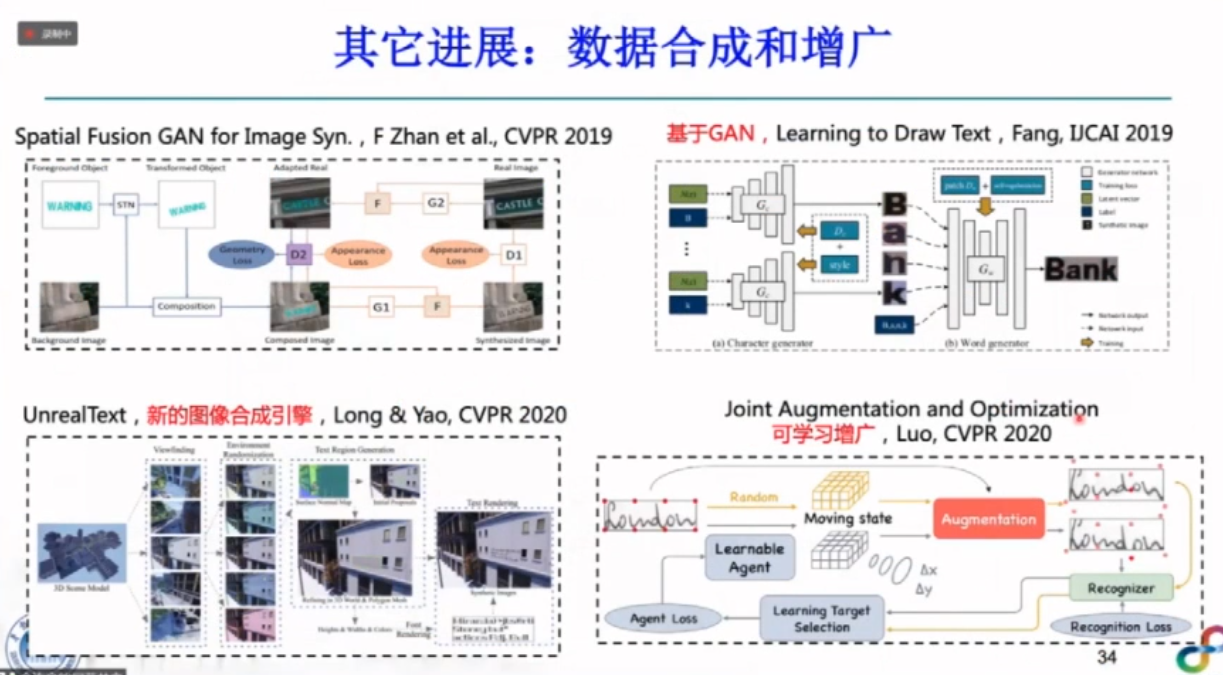

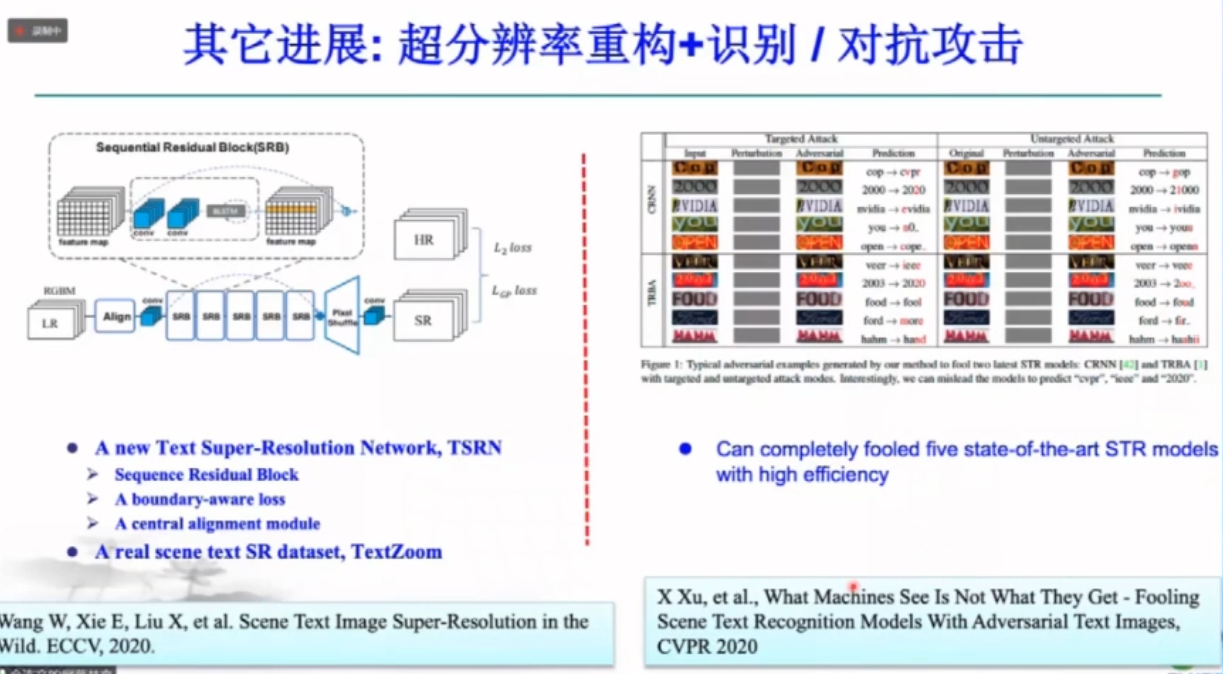

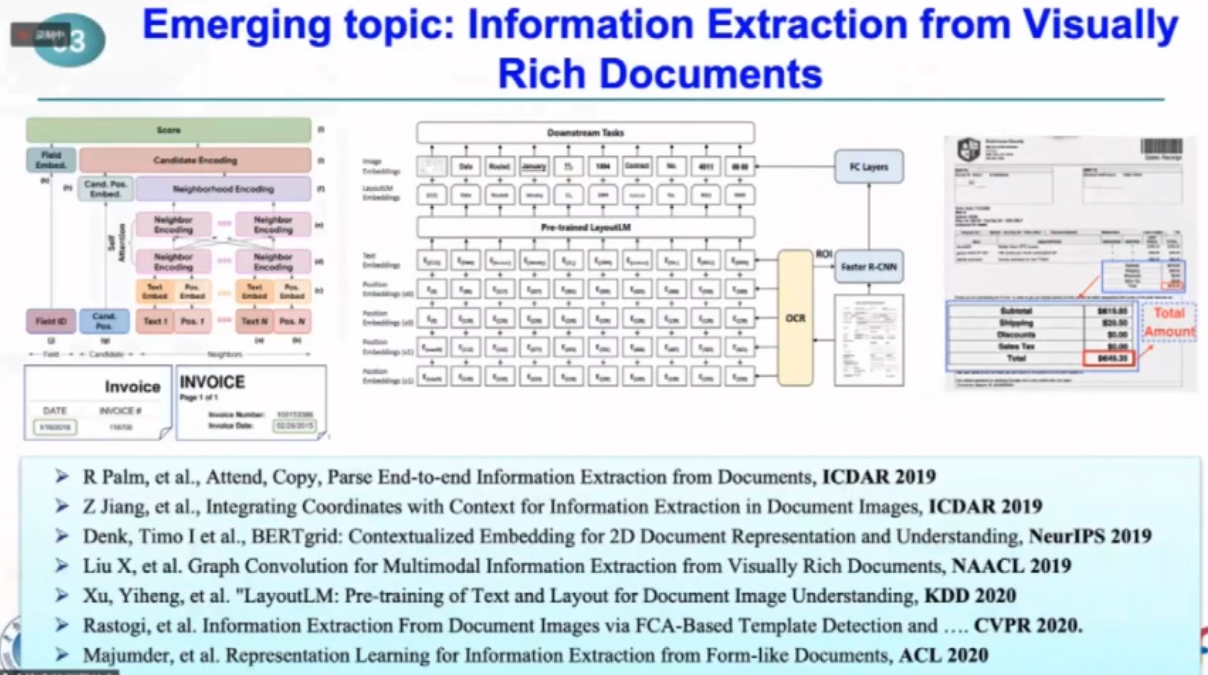

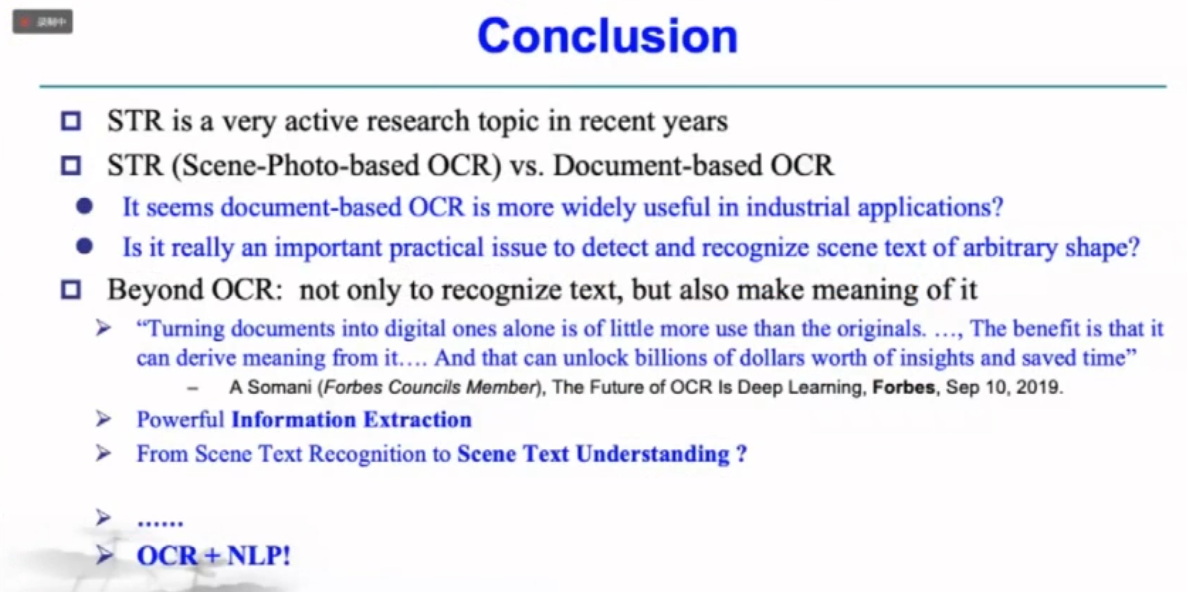

Scene Text Detection and Recognition

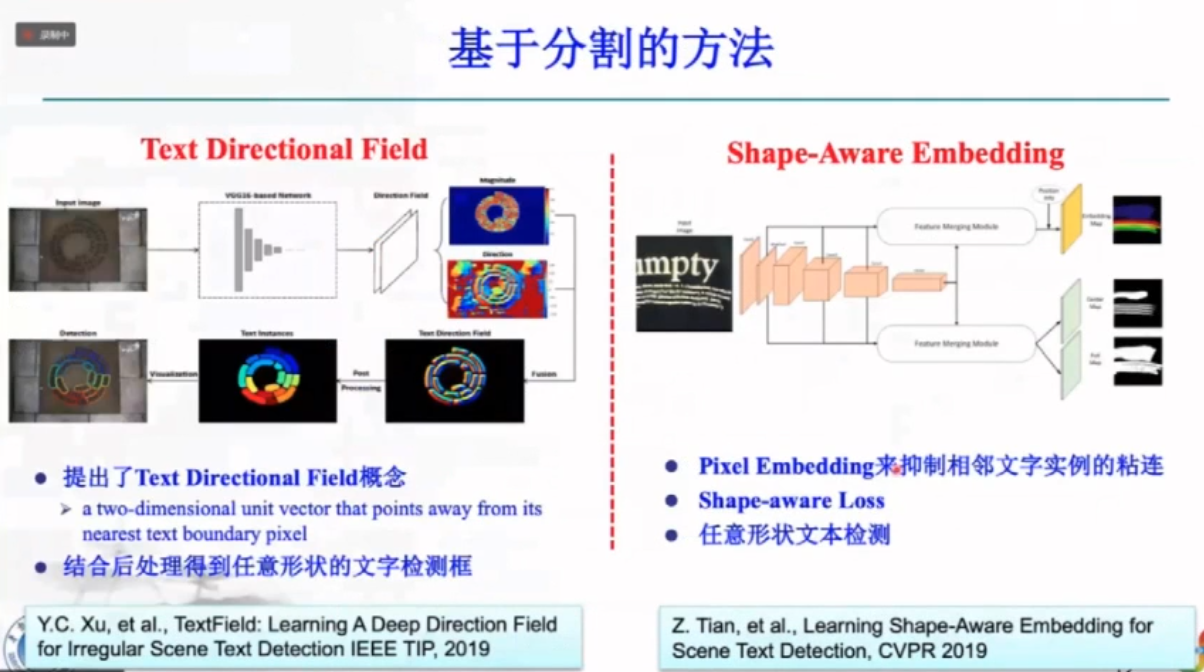

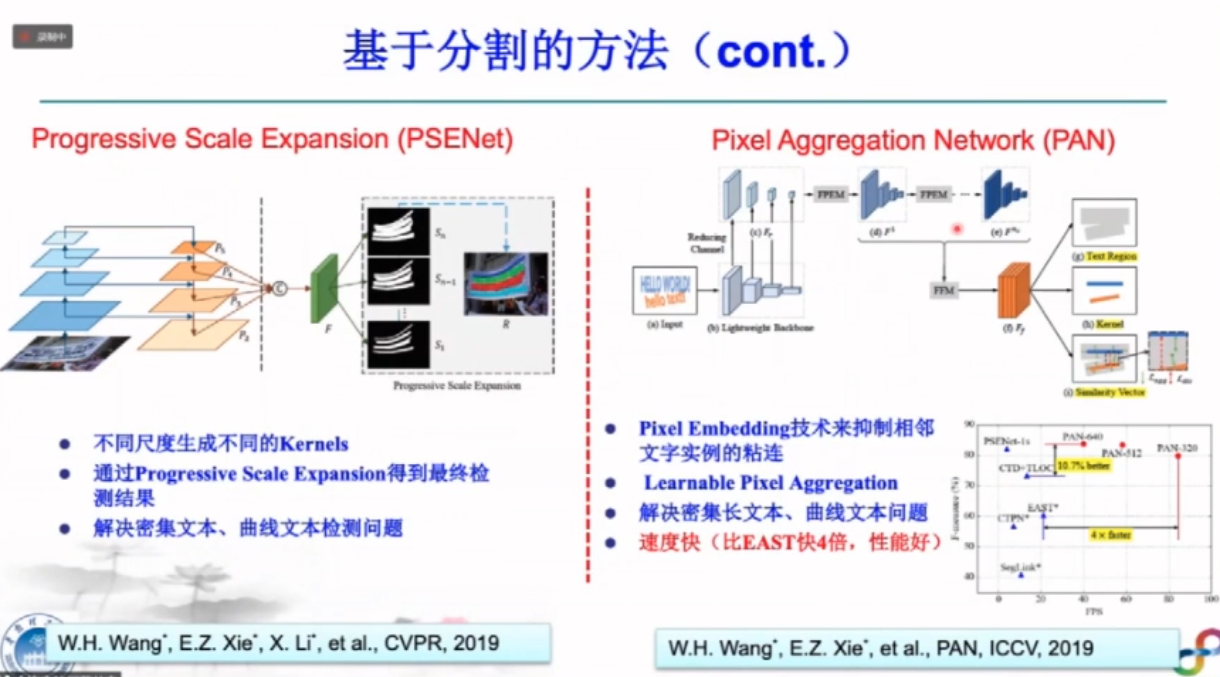

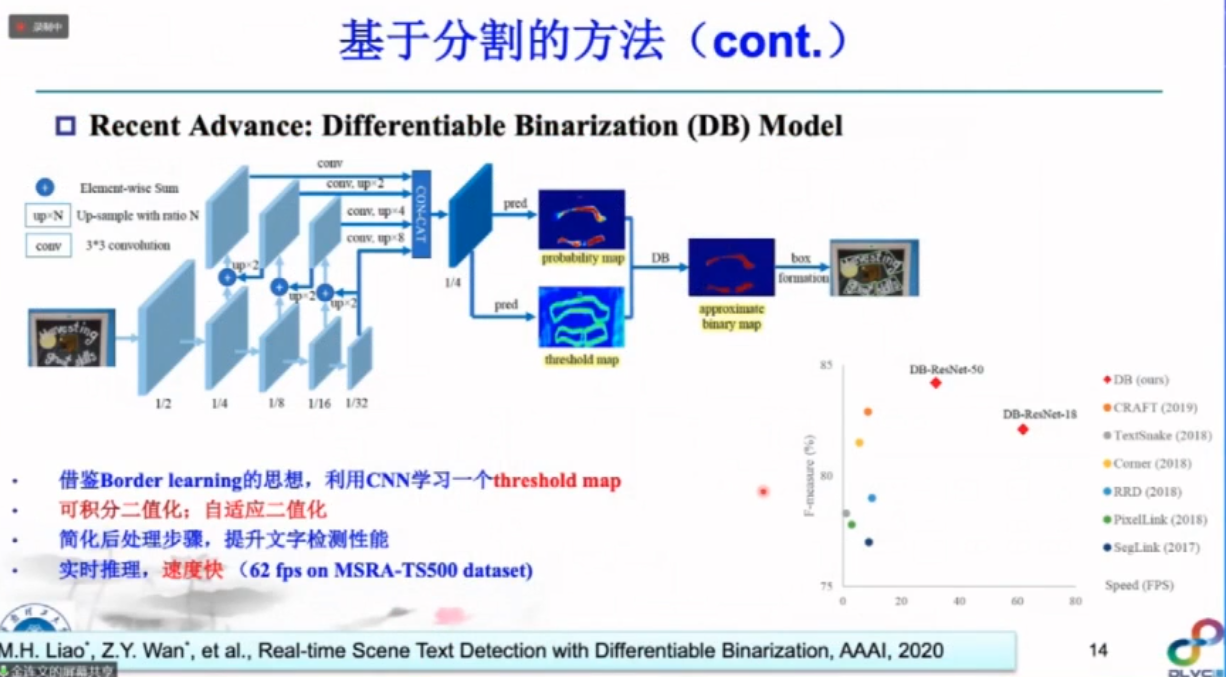

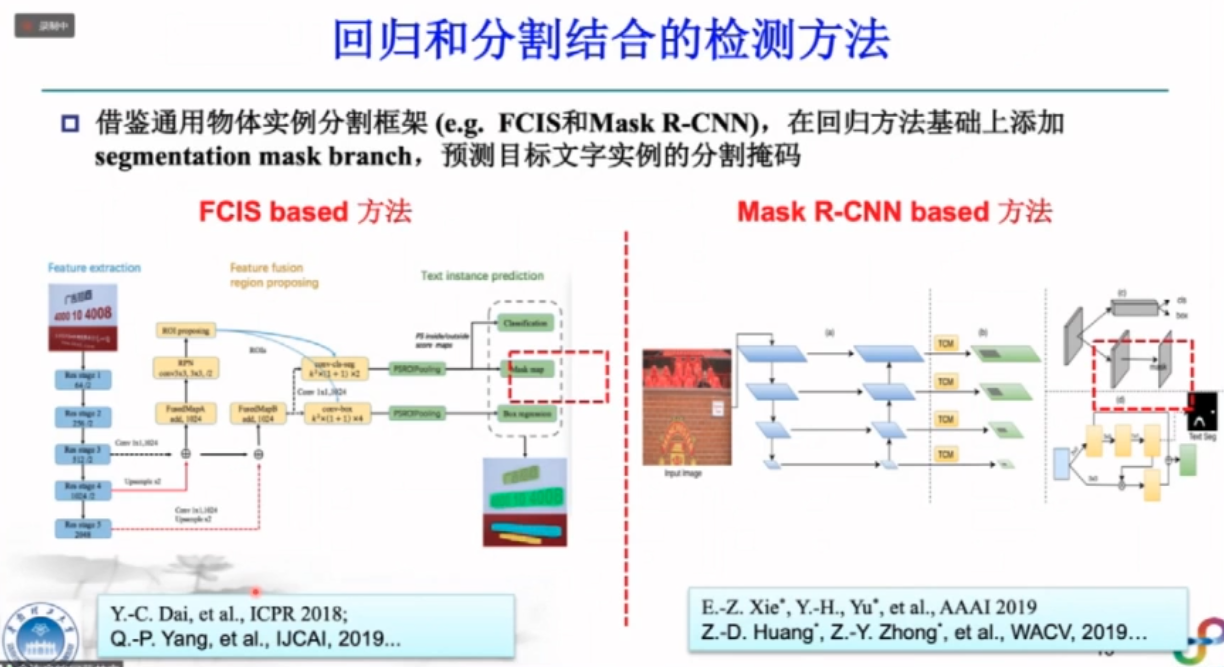

Scene Text Detection

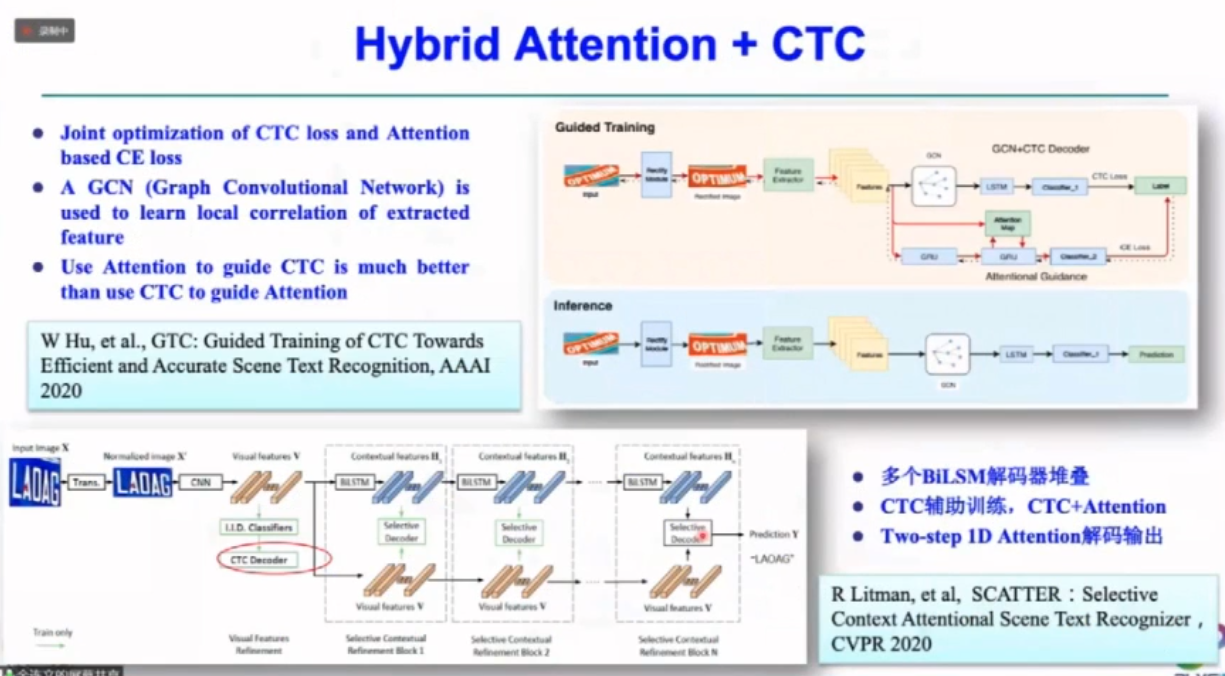

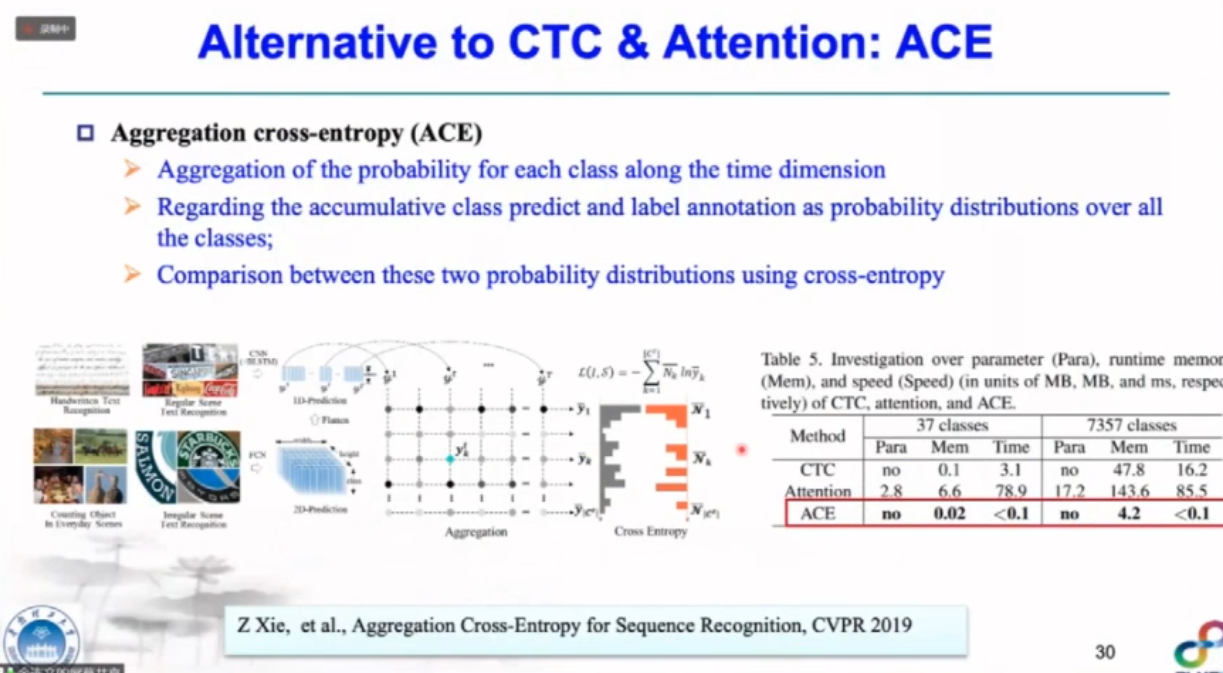

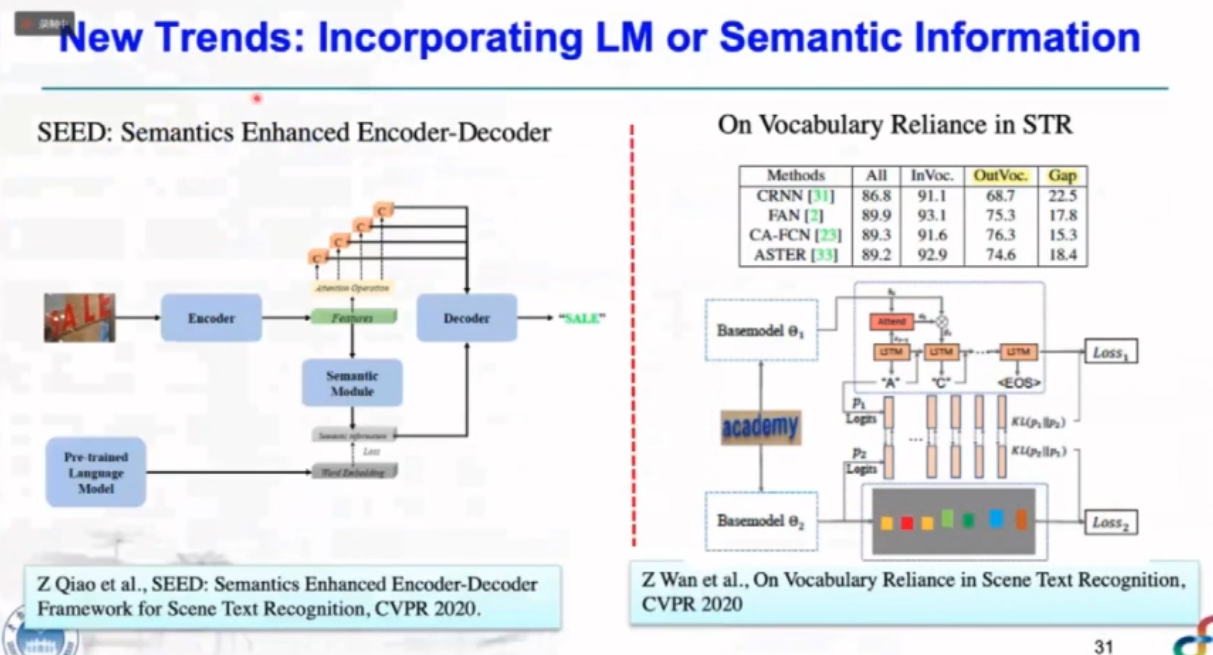

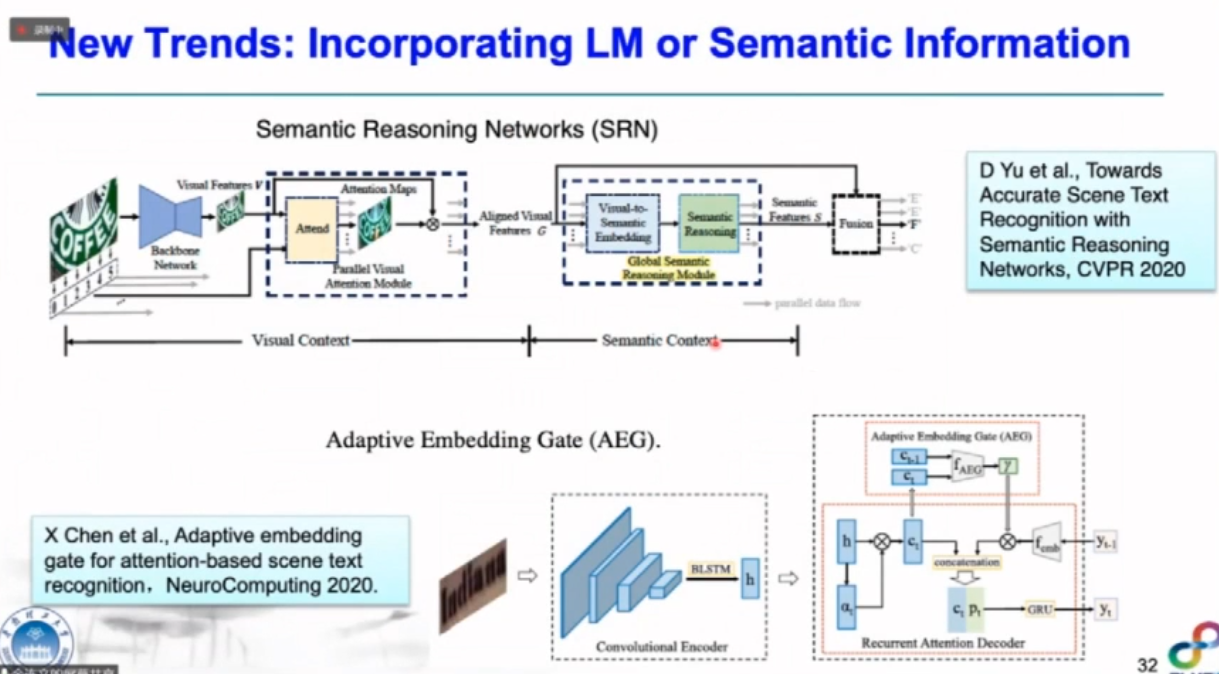

Scene Text Recognition

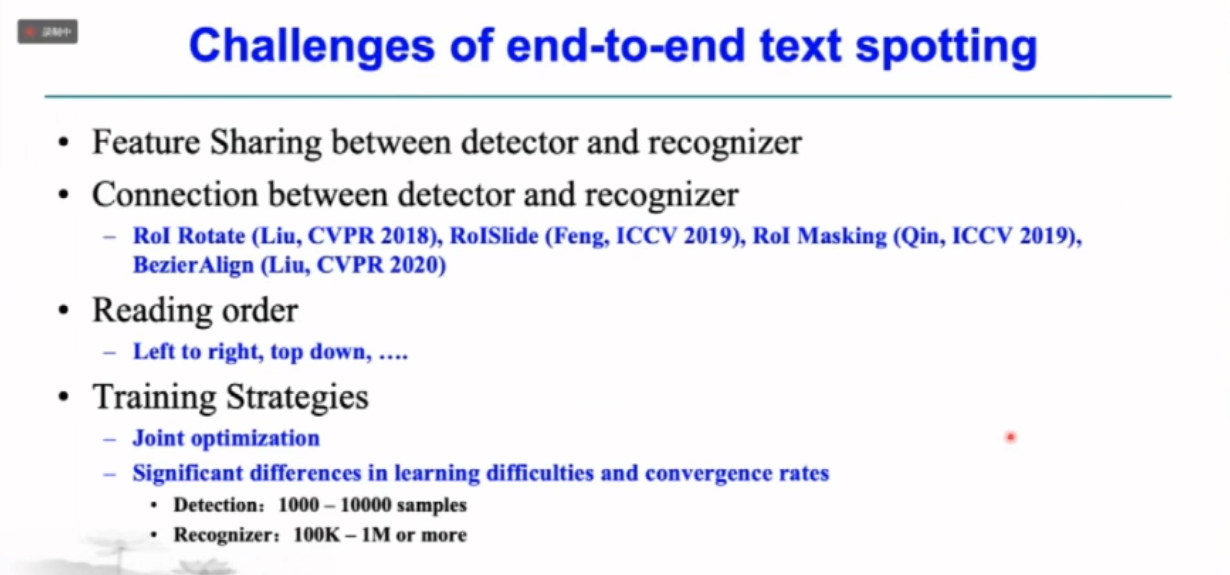

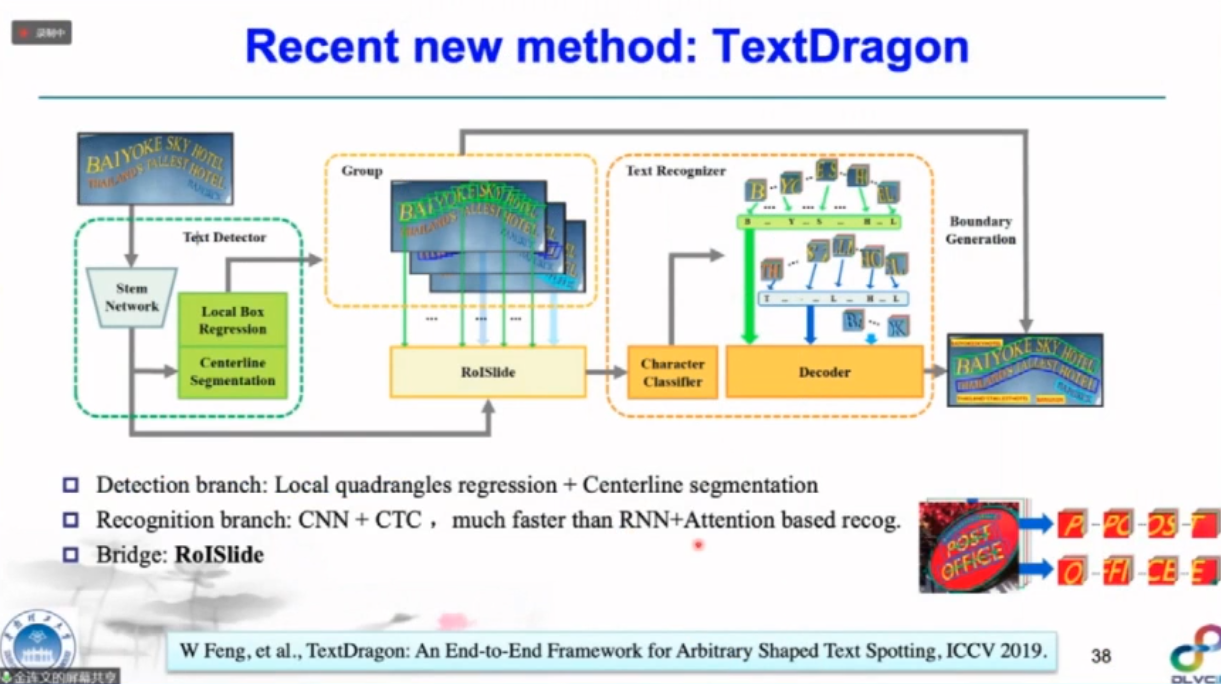

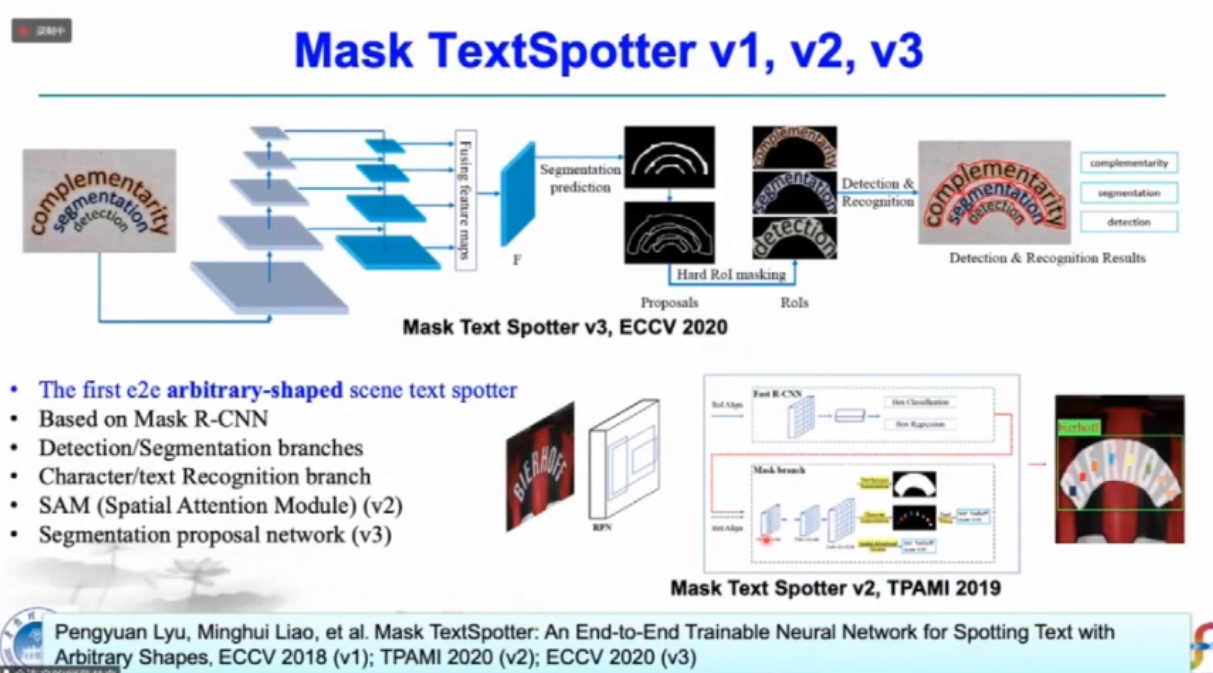

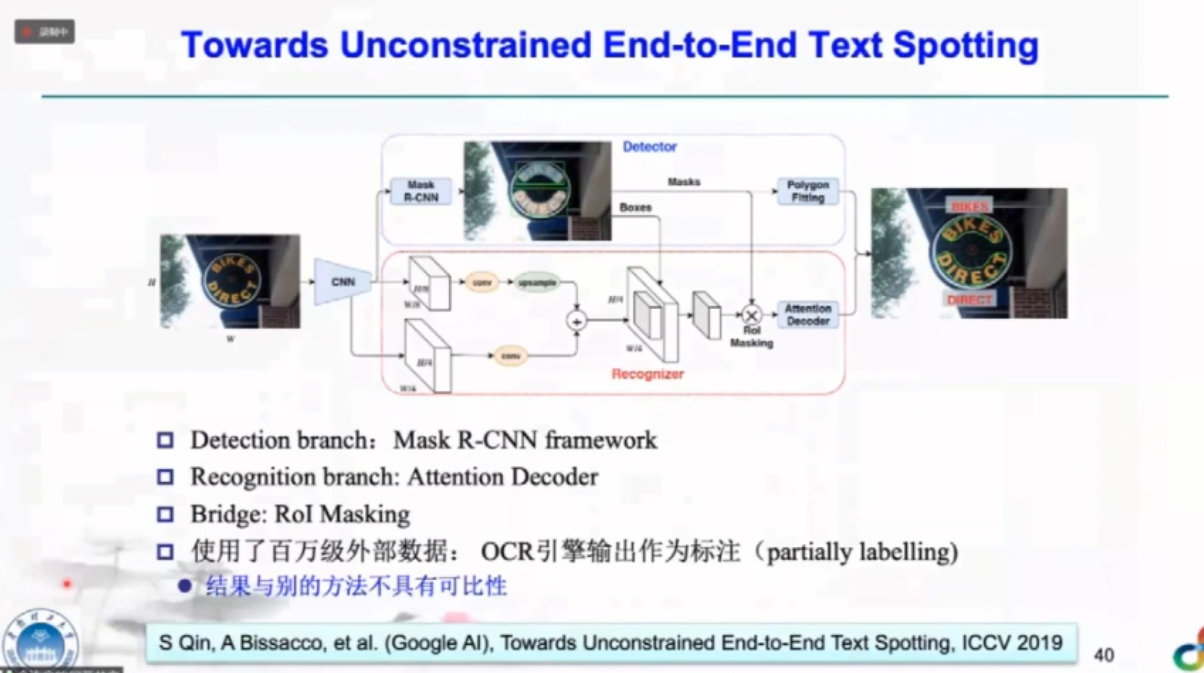

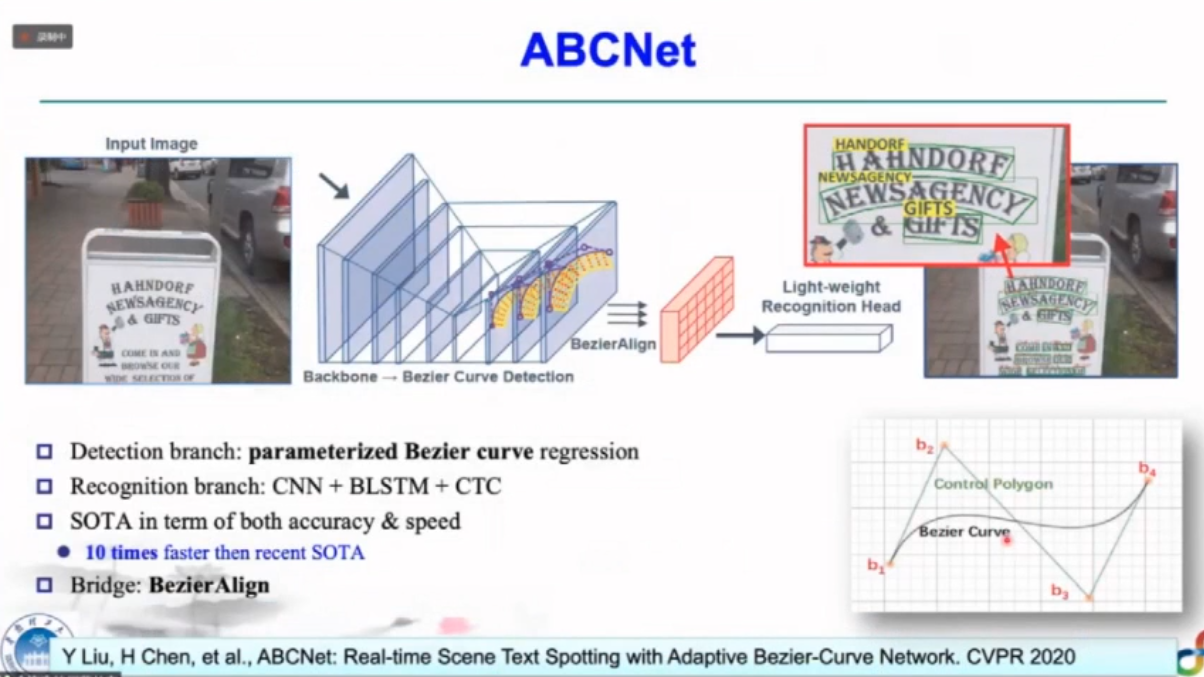

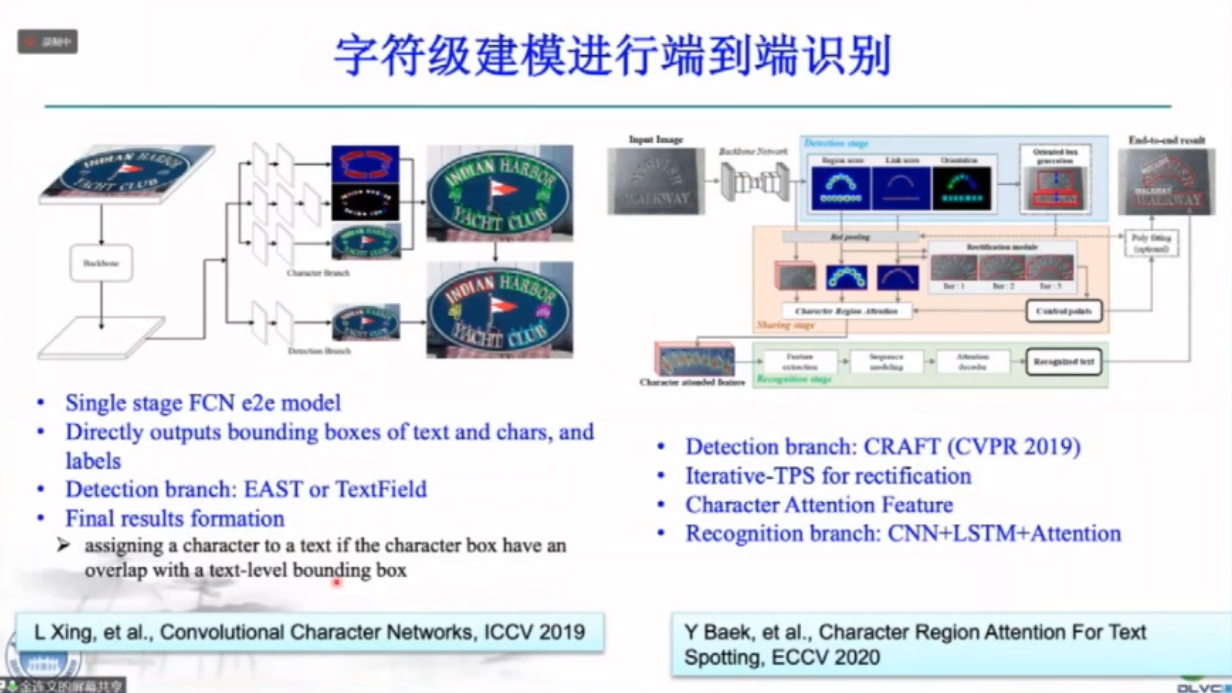

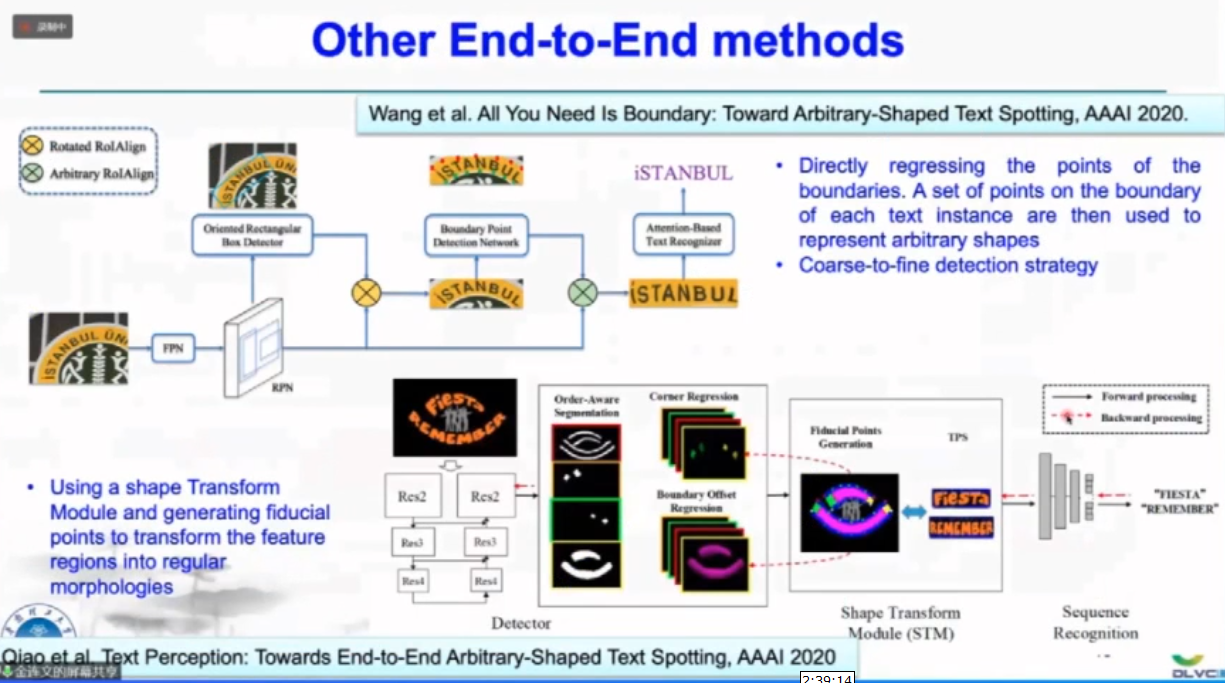

End-to-End Scene Text Recognition

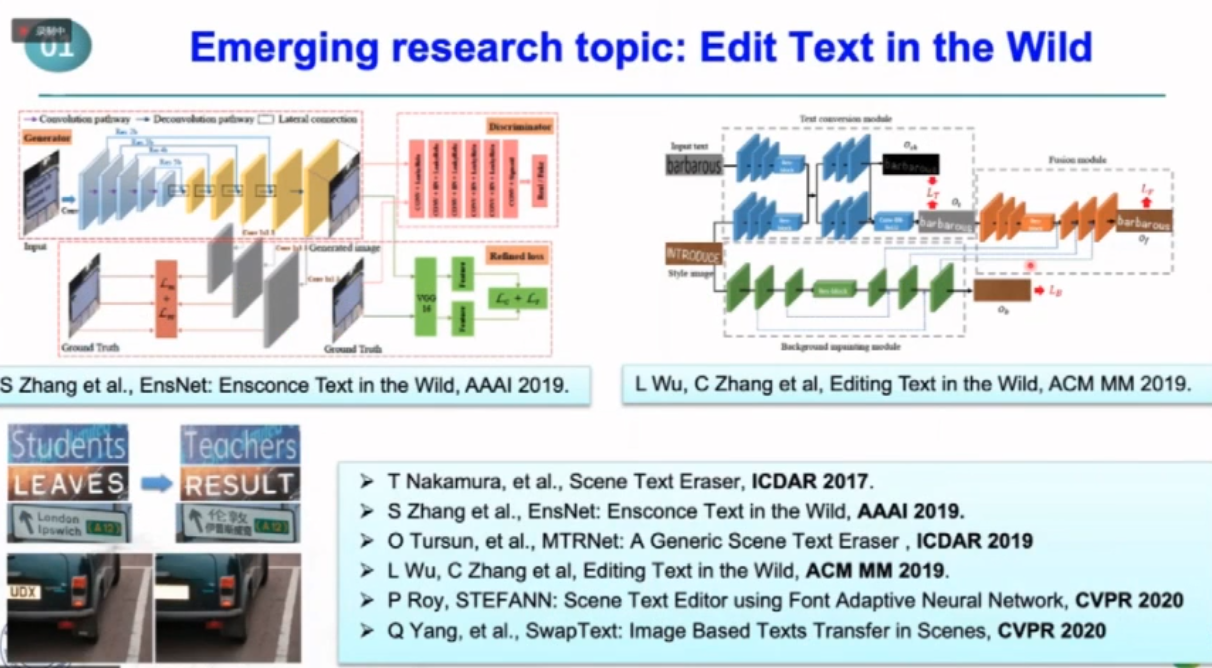

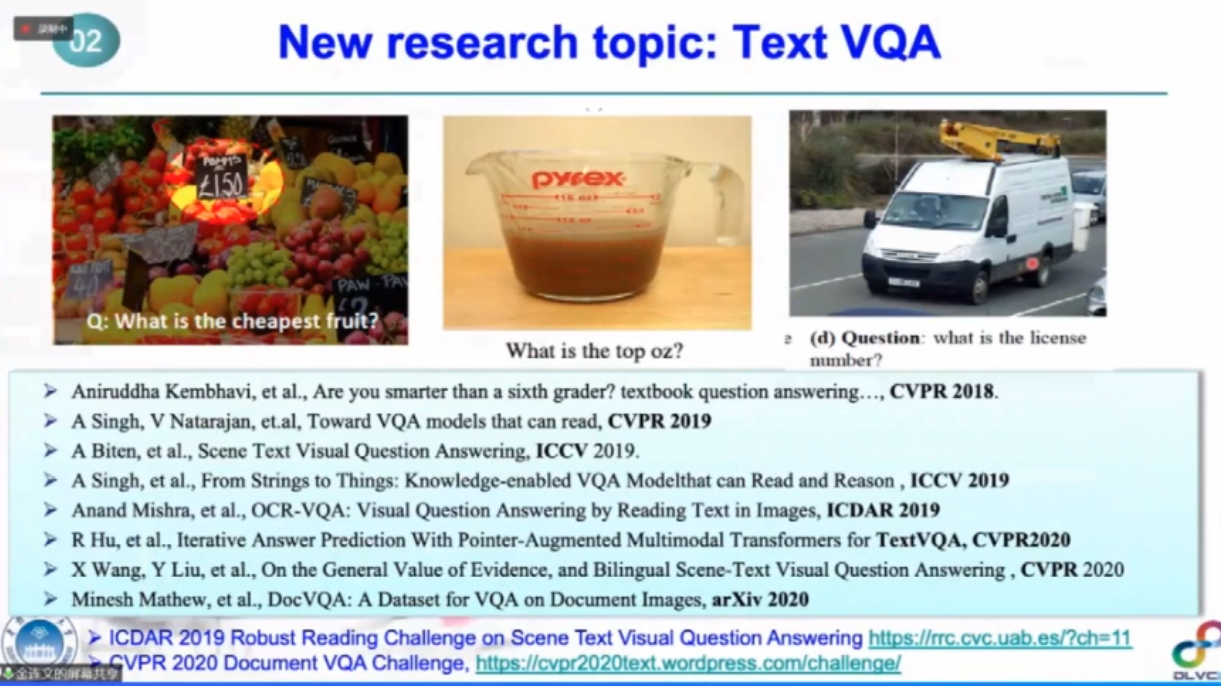

Future Outlook

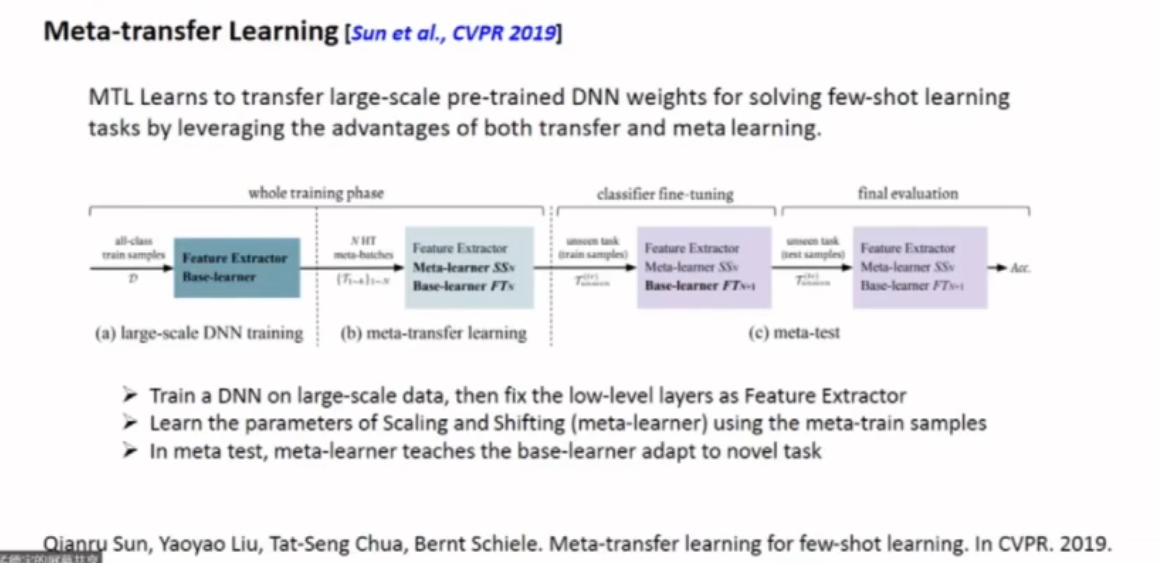

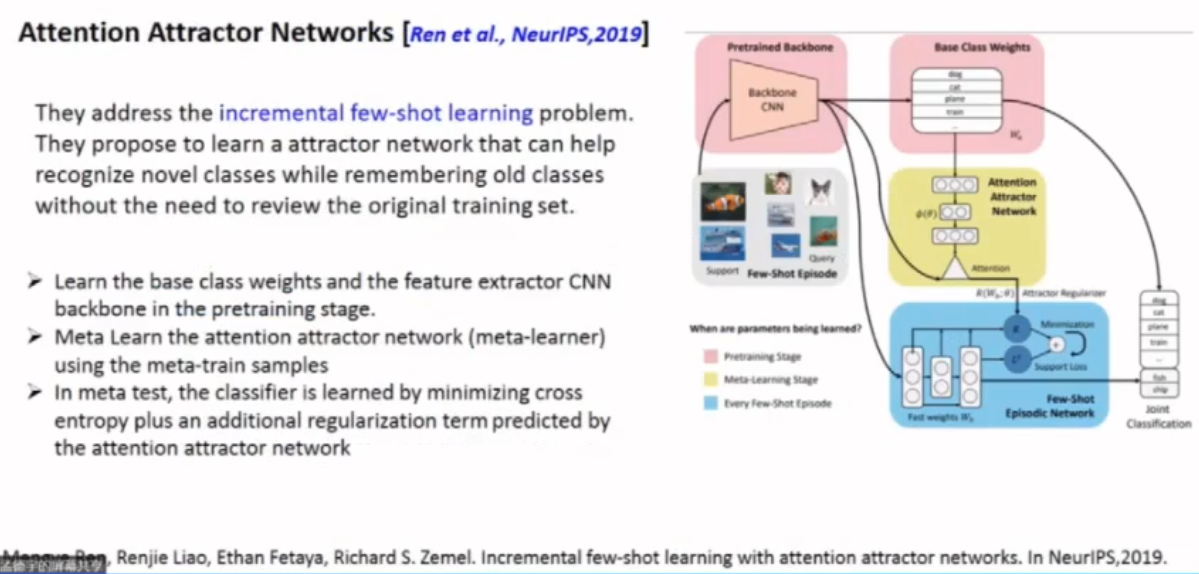

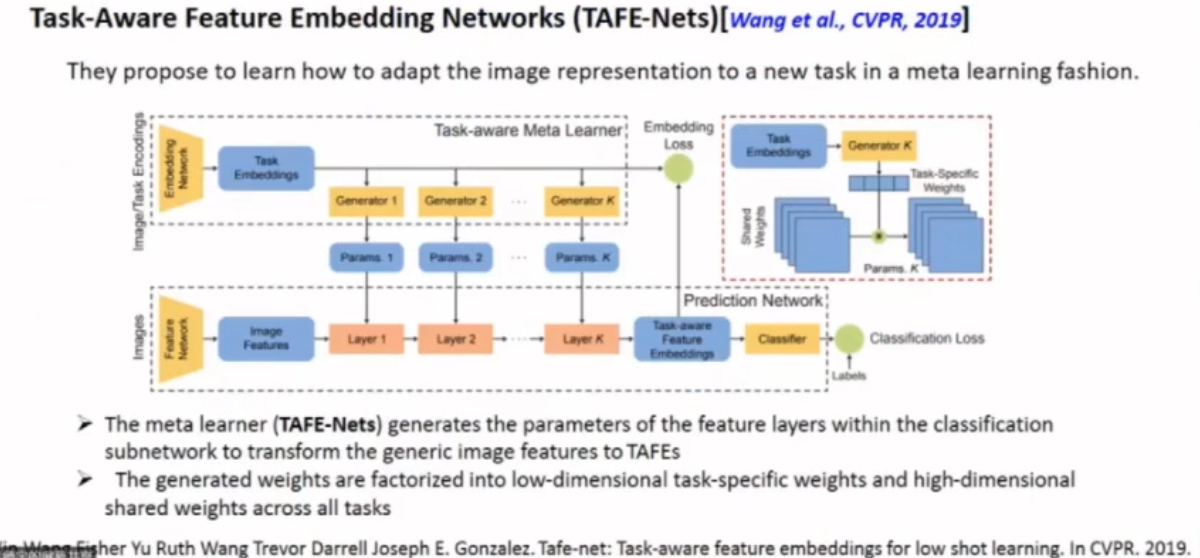

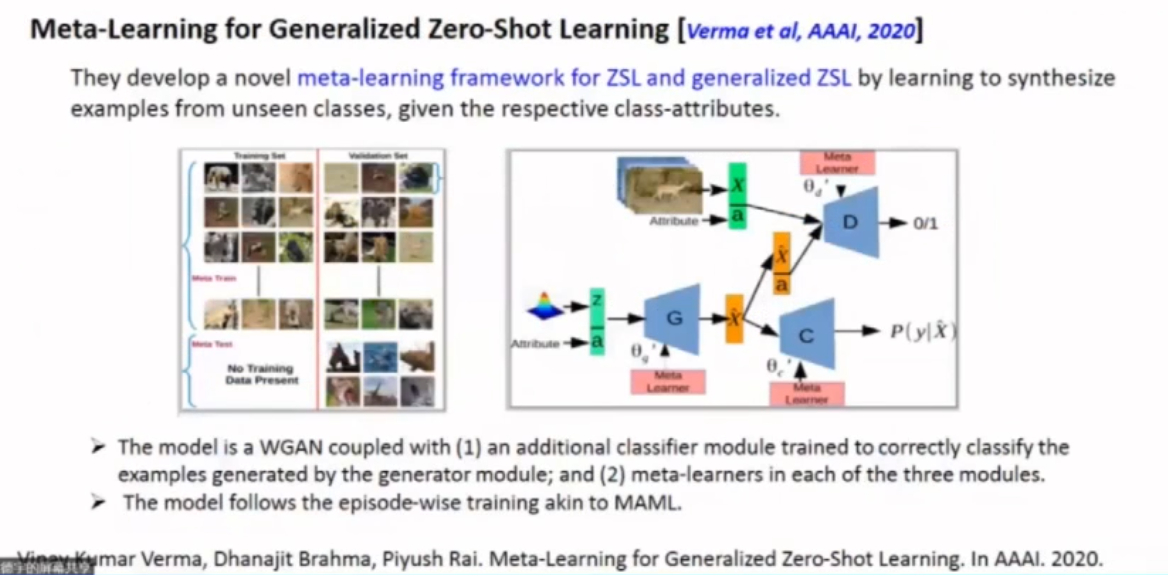

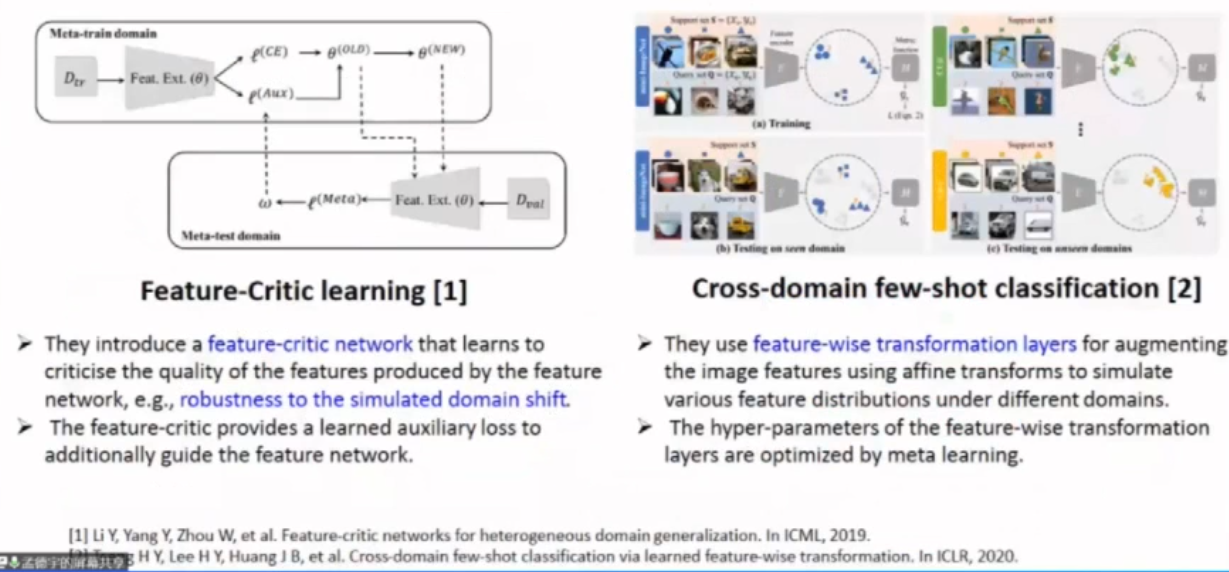

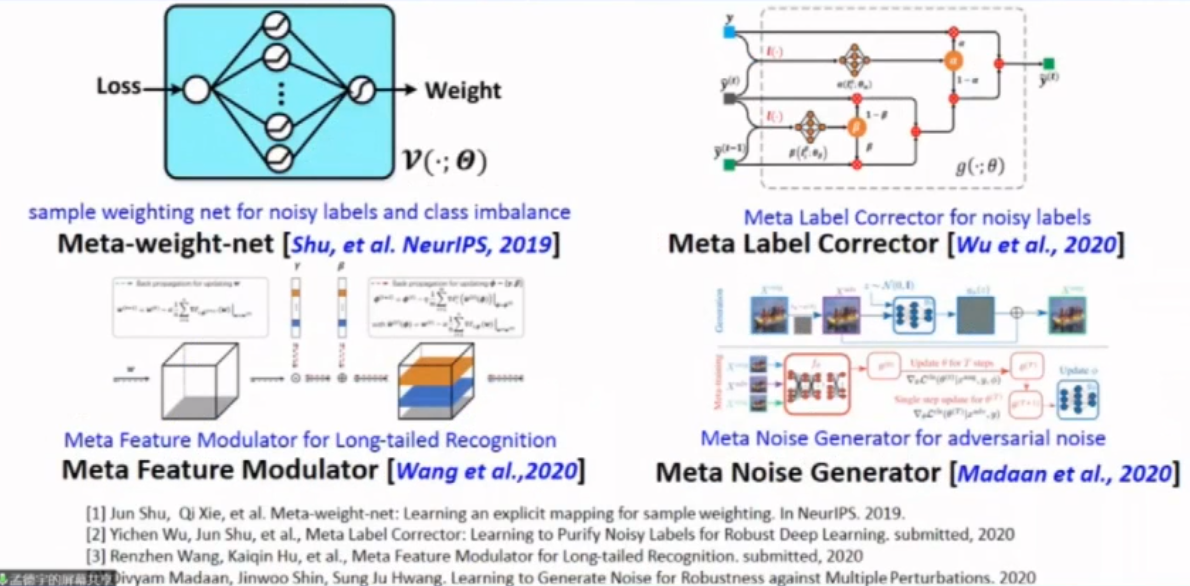

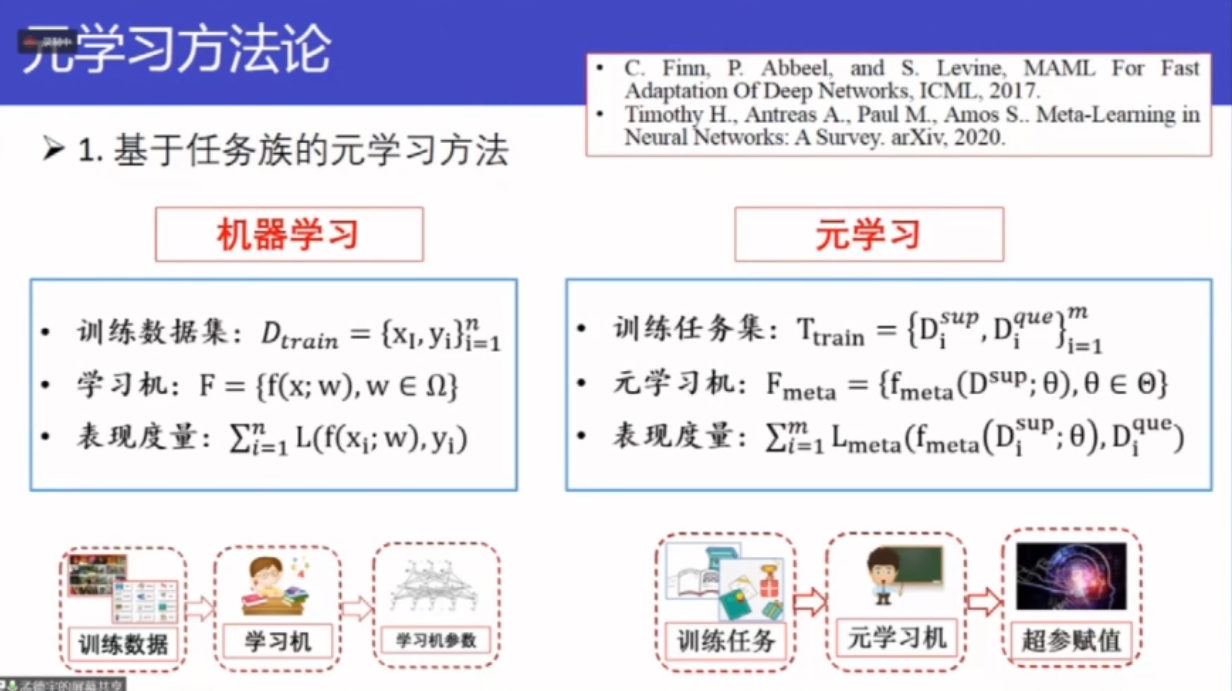

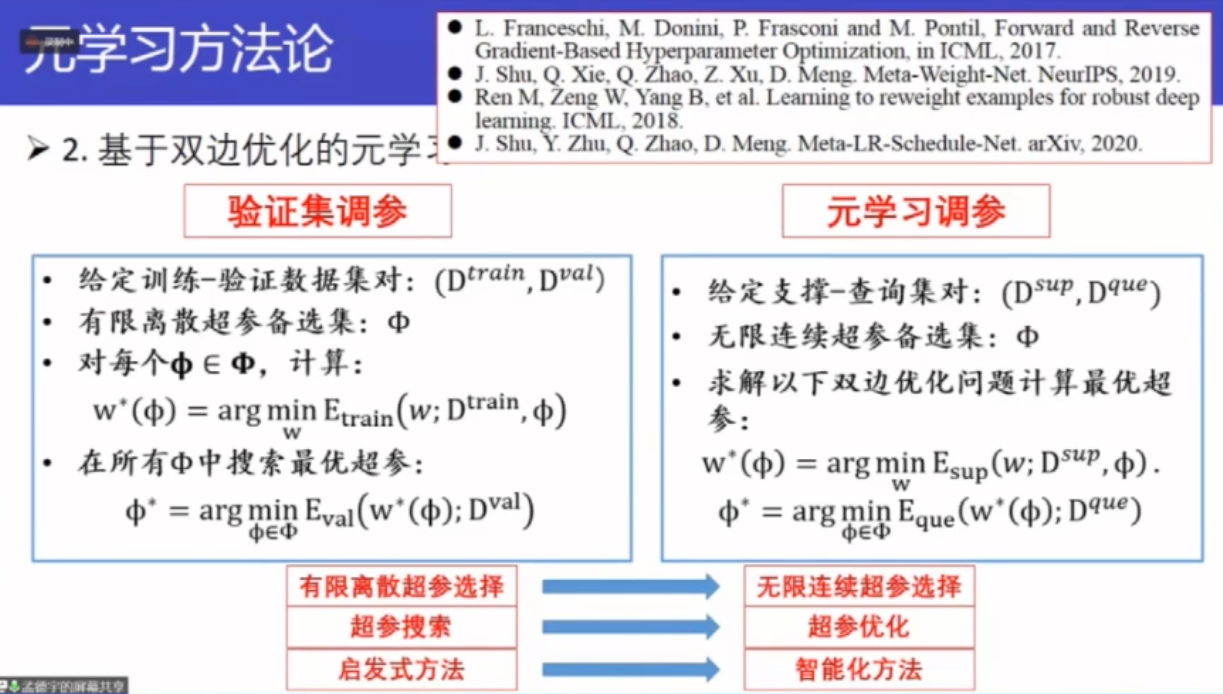

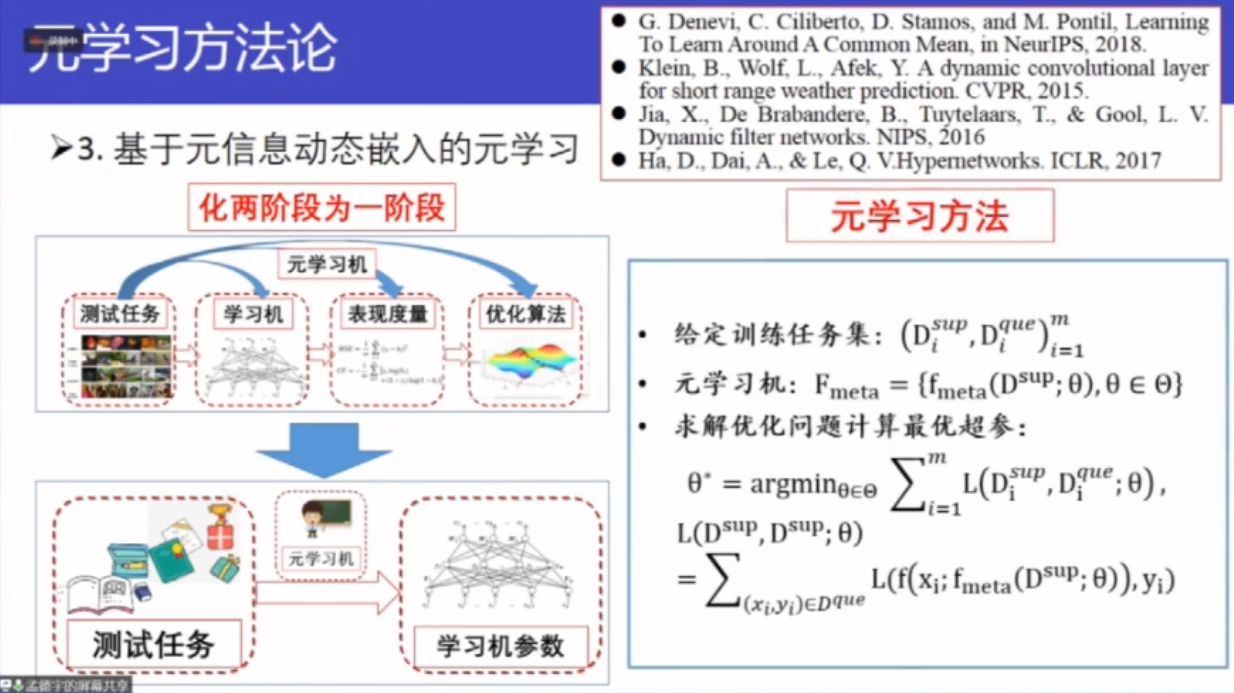

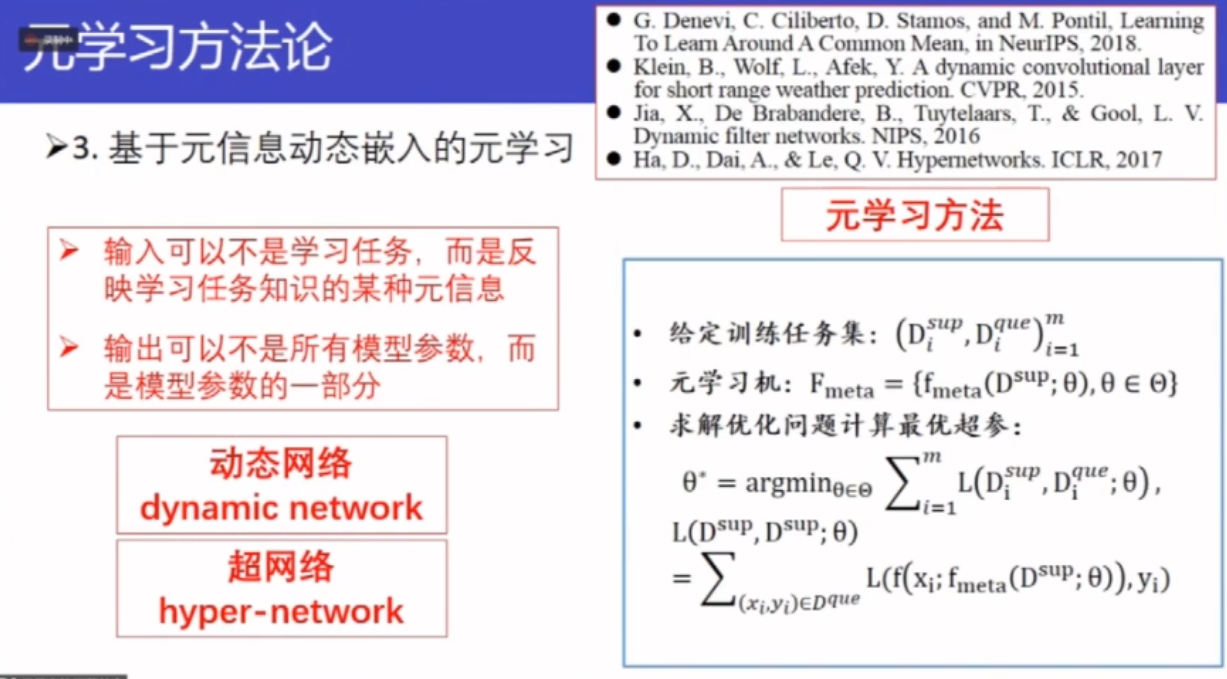

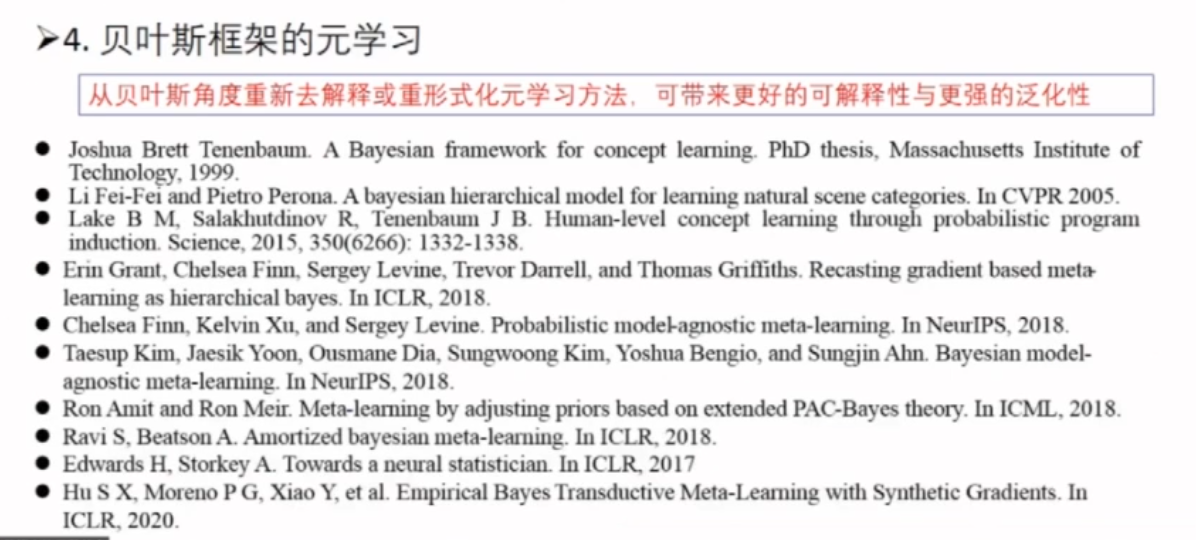

Meta-Learning

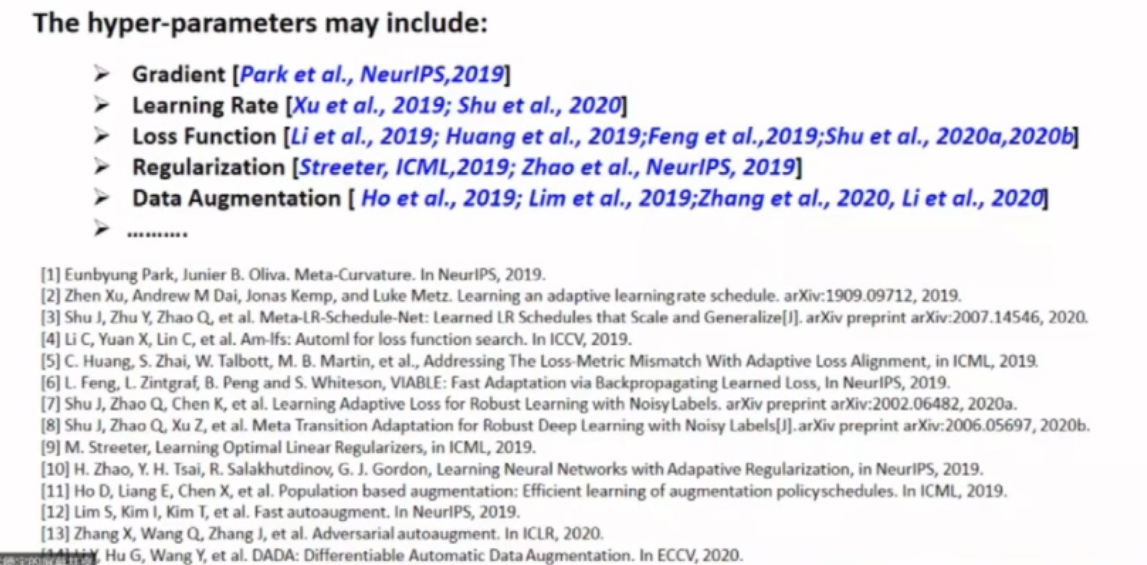

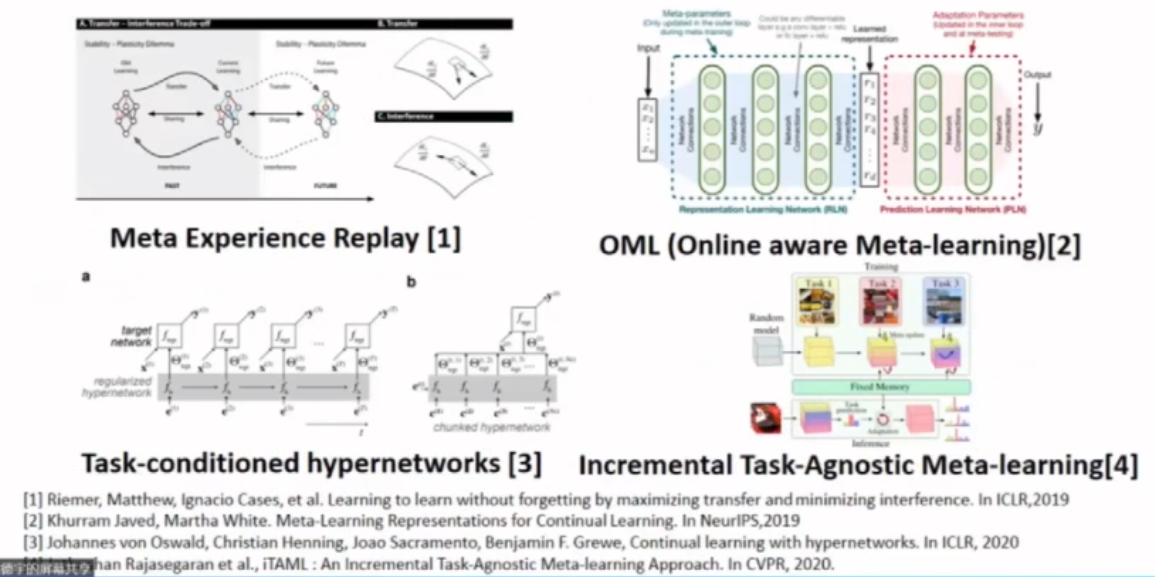

Meta-Learning Methodology

Applications of Meta-Learning

- few-shot learning

- NAS

- hyper-parameter learning

- continual learning

- domain generalization

- learning with biased data