VALSE2020 重要主题年度进展回顾 (APR)

视觉生成GAN

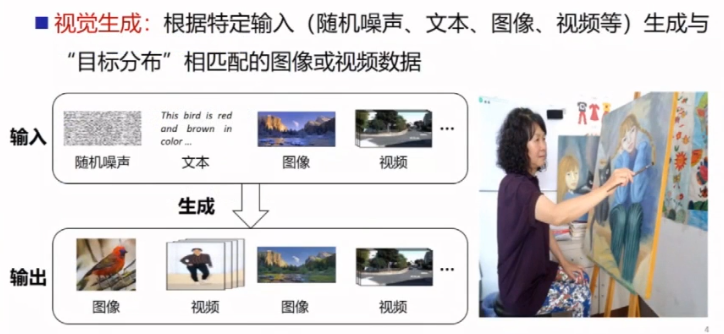

视觉生成背景介绍

视觉生成的应用:老电影着色,破损照片修复,真人变身漫画,角色AR化,医学研究

判别模型(CNN, SVM) vs 生成模型(VAE, GAN)

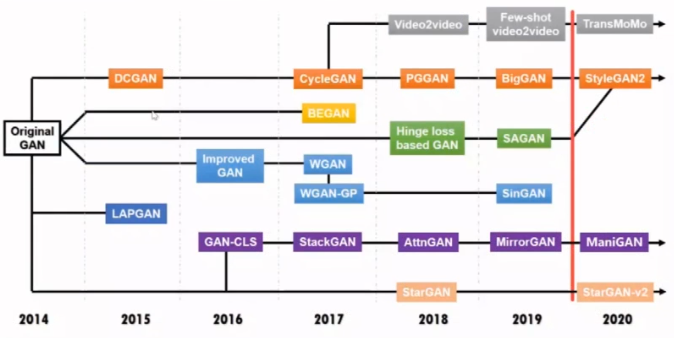

视觉生成典型问题及进展

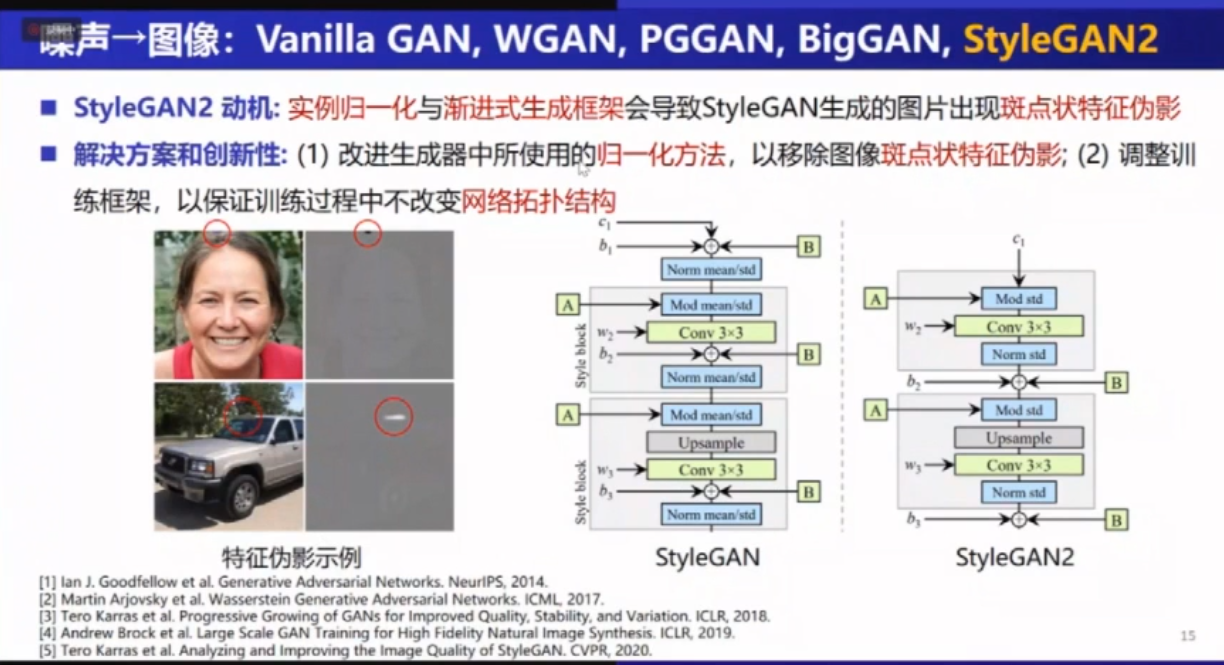

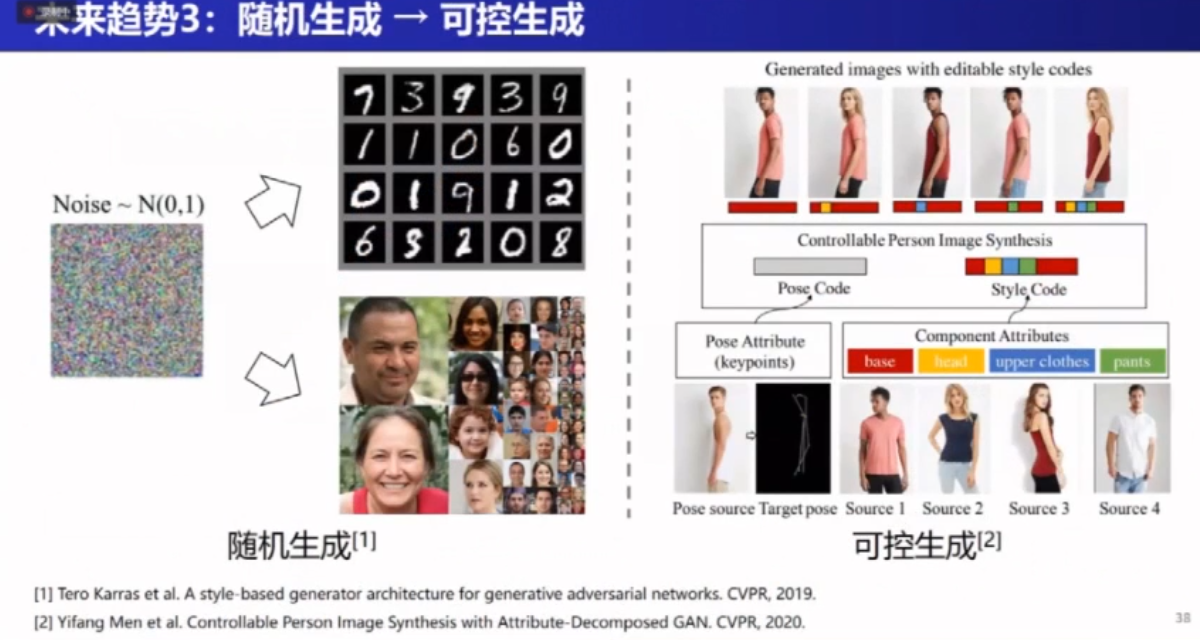

噪声–>图像

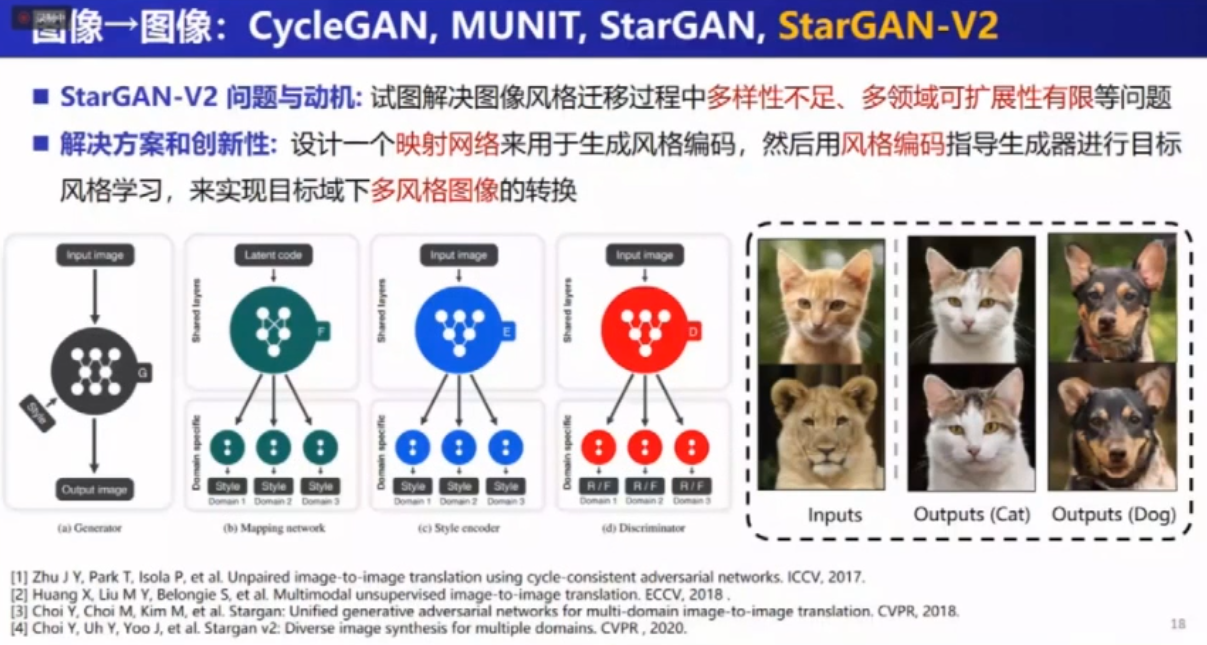

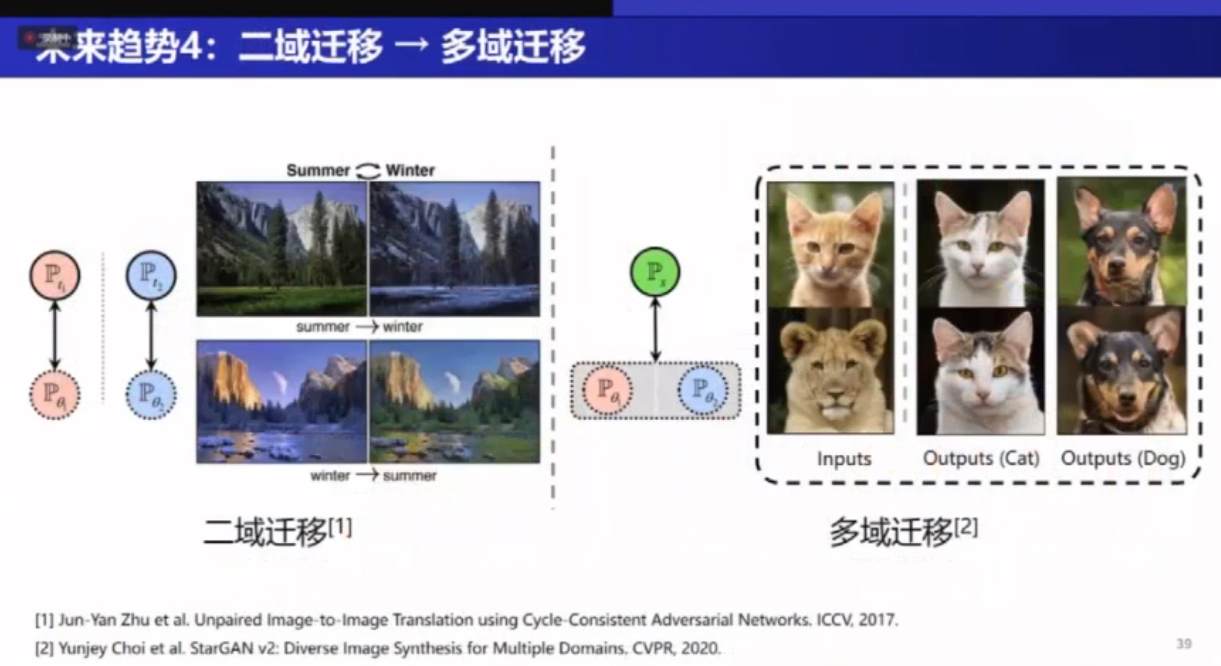

图像–>图像

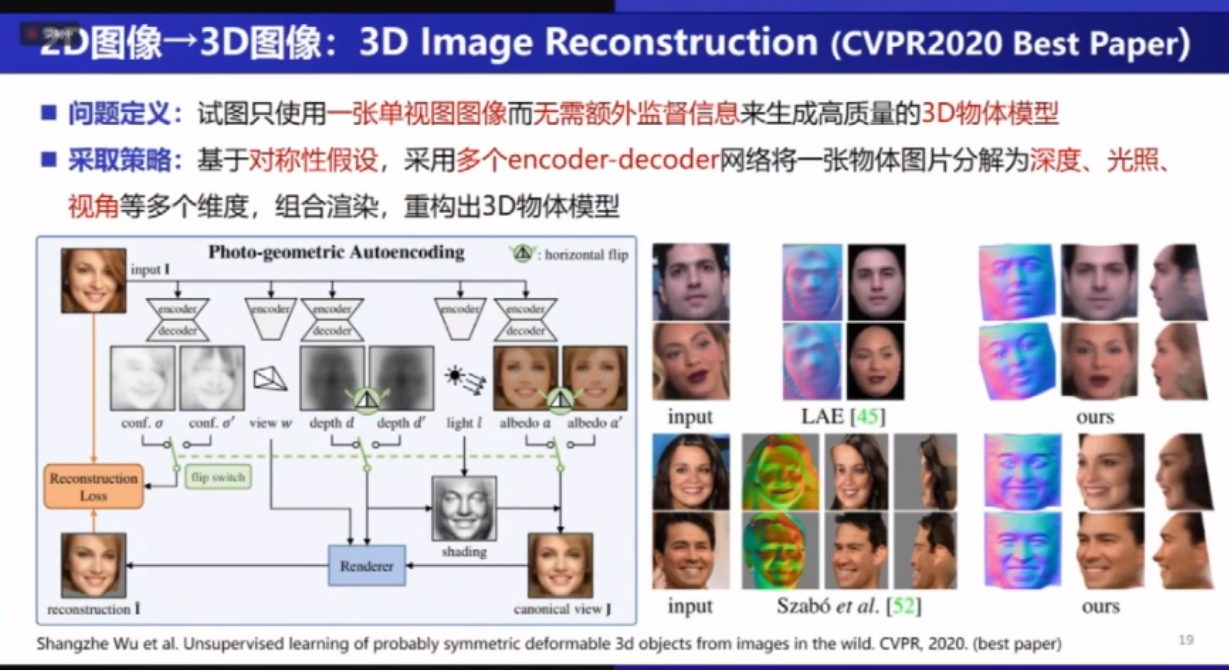

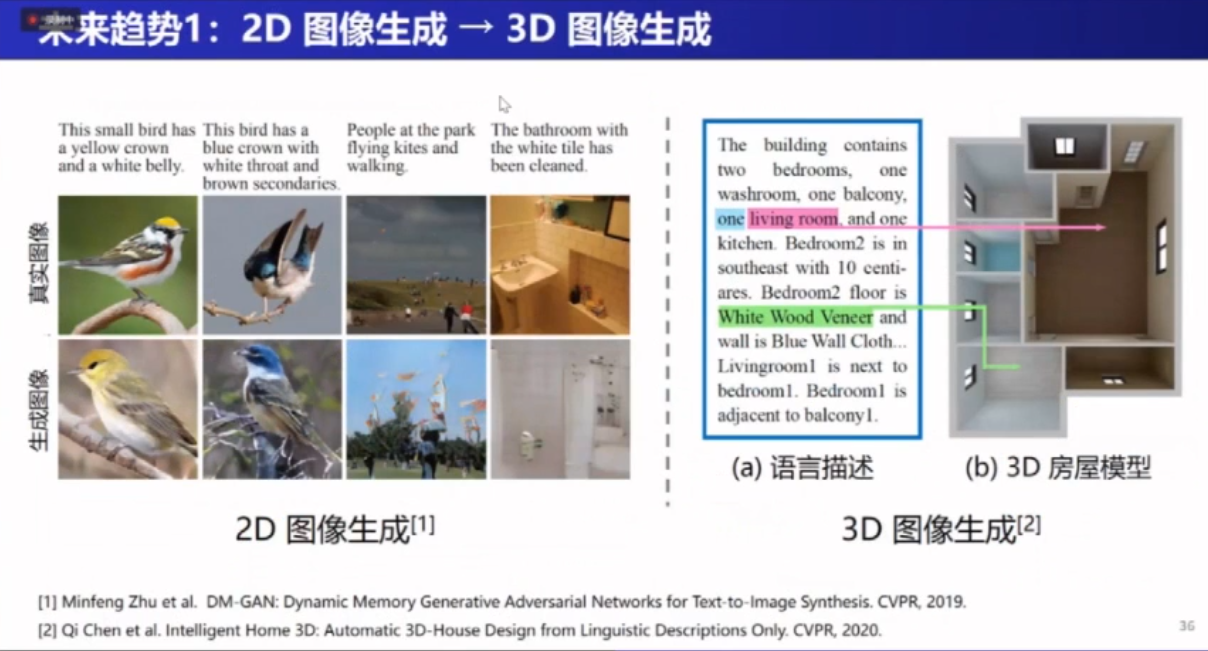

2D图像–>3D图像

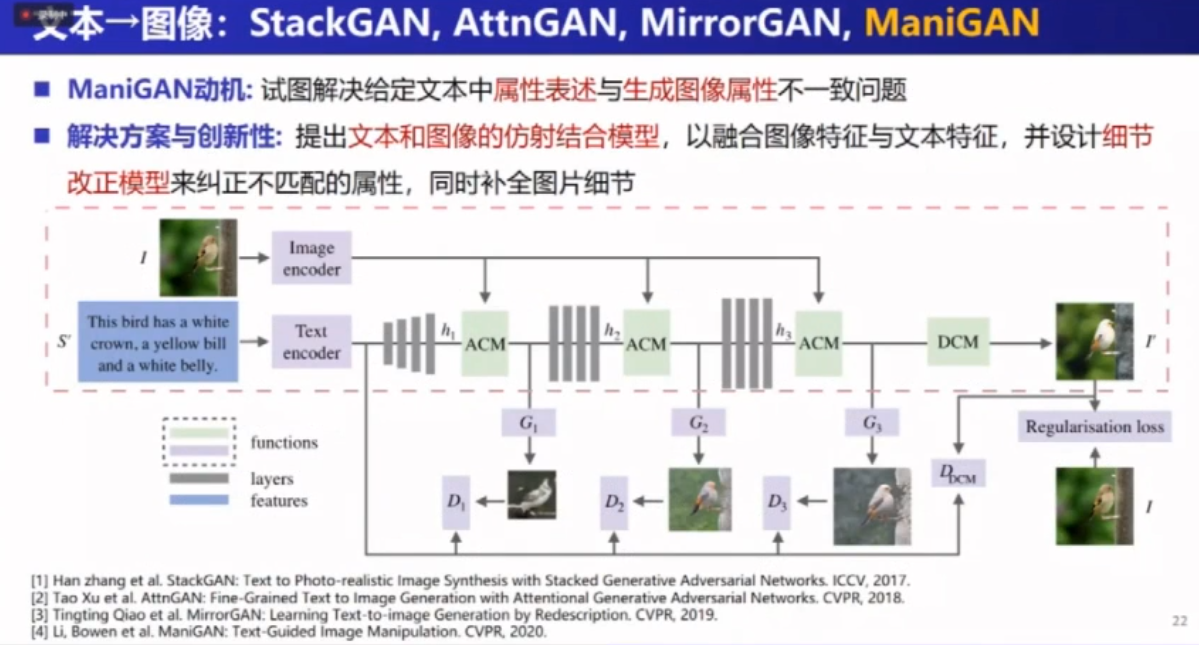

文本–>3D图像

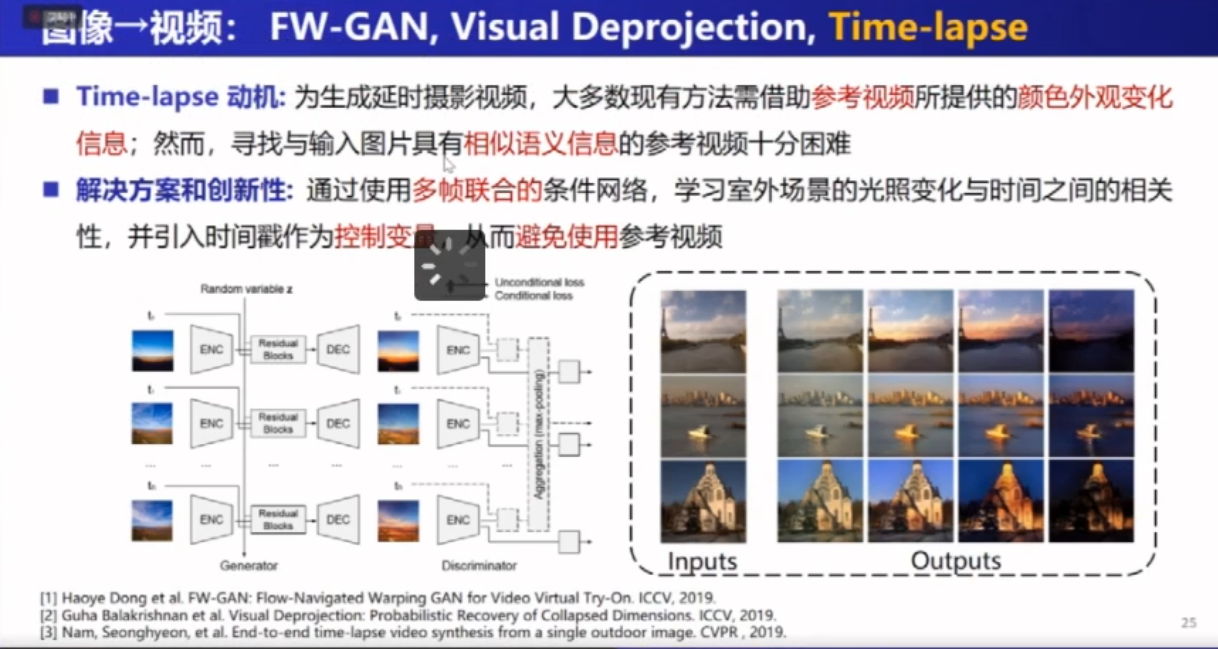

图像–>视频

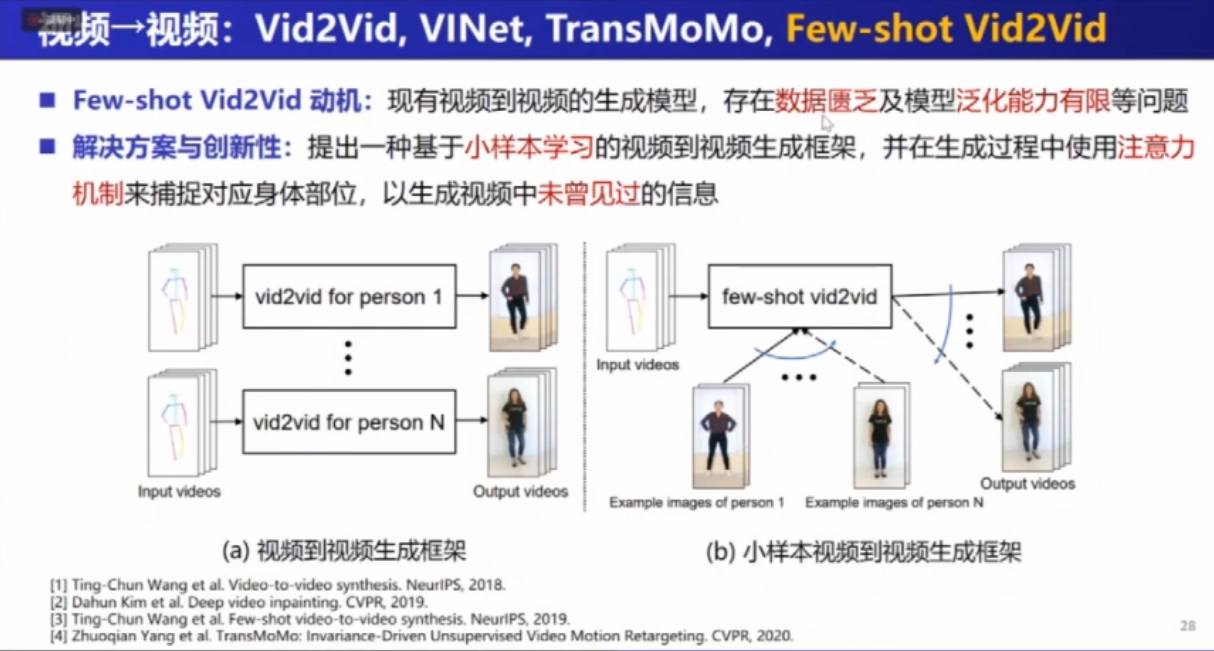

视频–>视频

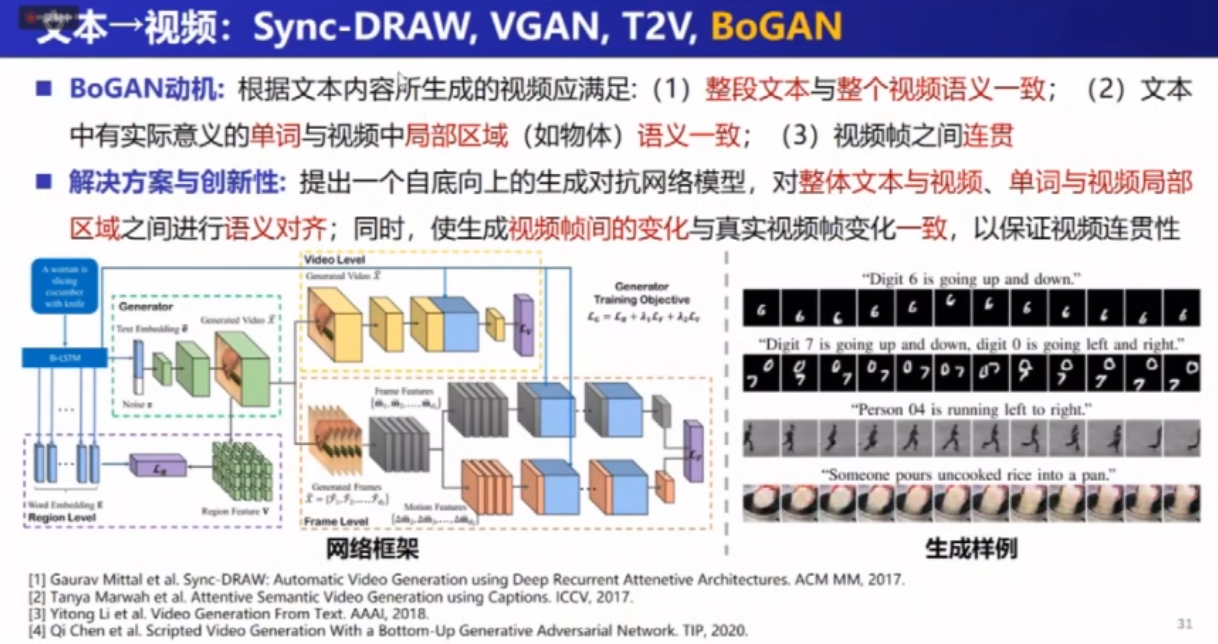

文本–>视频

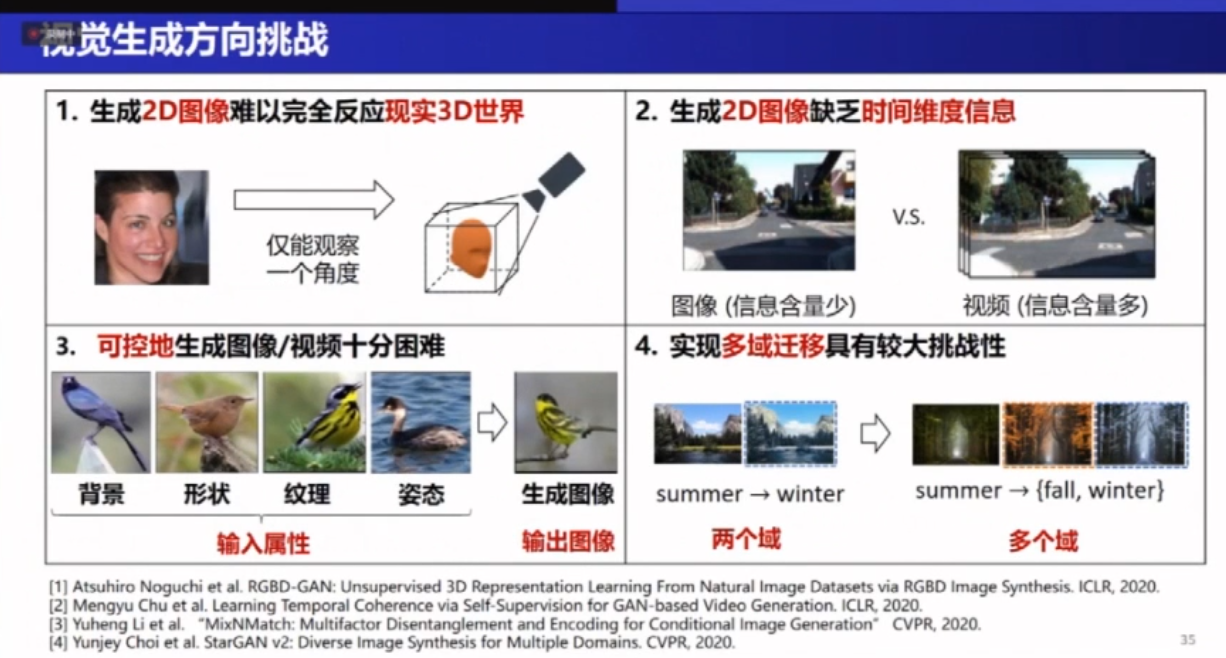

视觉生成挑战及未来趋势

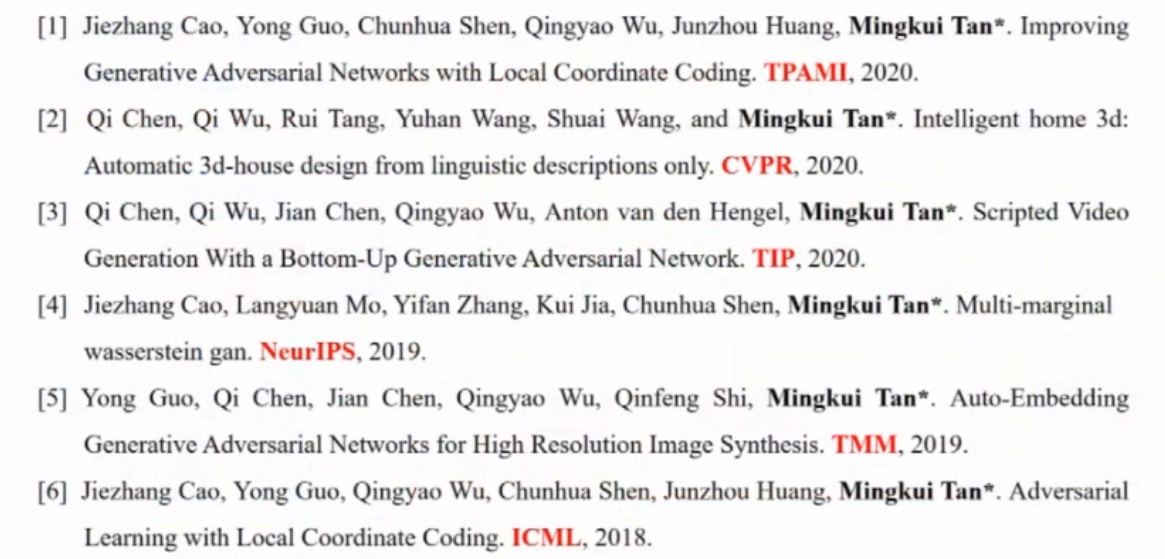

讲者(华南理工大学Mingkui Tan)相关工作

细粒度视觉识别

1. By localization-classification subnetworks

先定位(localize) pattern region,然后通过 pattern-level subnetworks 进行 feature fusion,从而更加鲁棒地细粒度特征表示。

- Mask-CNN (Xiushen Wei)

- Selective sparse sampling for fine-grained image recognition (ICCV2019, 中科院)

- Filtration and Distillation (AAAI2020, 中科院)

- Graph-propagation based correlation learning for weakly supervised fine-grained image classification (AAAI2020, 大连理工)

- weakly supervised fine-grained image classification via gaussian mixture model oriented discriminative learning (CVPR2020, 大连理工)

2. By end-to-end feature encoding

网络内部的特征交互(cross-layer),针对某些特定任务设计特定的损失函数,从而实现端到端的细粒度识别模型。

- Bilinear CNN

- Learning deep bilinear transformation for fine-grained image representation (NIPS2019)

- cross-X learning for fine-grained visual categorization (ICCV2019)

- fine-grained recognition: accounting for subtle differences between similar classes (AAAI2020) 通过 diversification block + gradient boosting 使网络聚焦在易错分的类别上

- fine-grained image-to-image transformation towards visual recognition (CVPR2020) 细粒度图像的GAN

3. By leveraging attention mechanisms

- look closer to see better (CVPR2017) 多尺度注意力机制

- learning a mixture of granularity-specific experts for fine-grained categorization (ICCV2019) 多粒度的 experts 系统。

- attention convolutional binary neural tree for fine-grained visual categorization (CVPR2020) 注意力机制 + 树结构

4. By contrastive learning manners

learning attentive pairwise interaction for fine-grained classification (AAAI2020) 输入为一对图片,mutual vector learning + gate vector generation + pairwise interaction

channel interaction networks for fine-grained image categorization (AAAI2020)

5. Recognition with web data

- web-supervised network with softly update-drop training for fine-grained visual classification (AAAI2020) 通过交叉熵检测出噪声大的图像

6. Recognition with limited data

- piecewise classifier mapping: learning fine-grained learners for novel categories with few examples

- multi-attention meta learning for few-shot fine-grained image recognition (IJCAI2020)

- revisiting pose-normalization for fine-grained few-shot recognition (CVPR2020)

7. Fine-grained datasets

- RPC: A large-scale retial product checkout dataset (arxiv2019)

自监督学习

Background: Self-supervised

预训练模型本身没有直接的应用价值,不管是监督/无监督的自监督学习算法,都是为了下游任务。

自监督好处:

- human designed labels may be sub-optimal

- unleash the power of untapped data

- how human may learn

Self-supervised learning on ImageNet

预训练模型在ImageNet上进行图像分类几乎达到监督学习的精度。

个体/样本分类:对单一图片进行图像增强,对于具有1M张图片的数据集,我们拥有了1MxN张图片,对应了1M个类别。

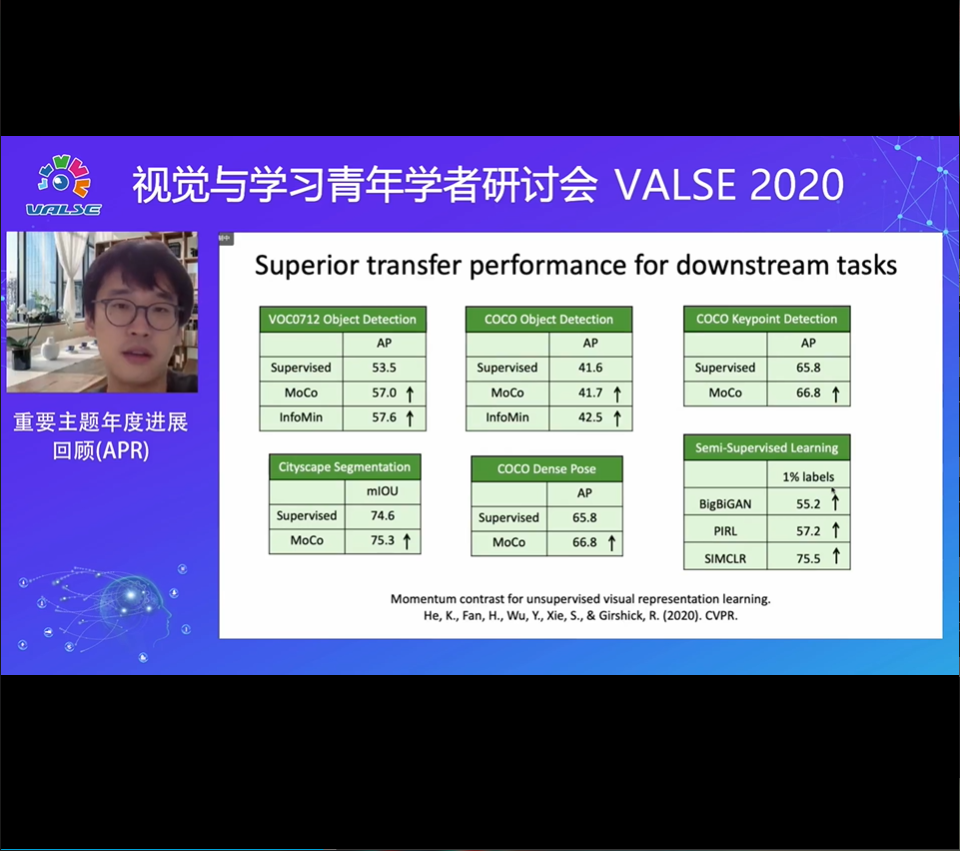

Self-supervised > Supervised

上图中,自监督学习得到的预训练模型在下游任务中更有效!

上图文章给出的三个结论:

- 迁移学习迁移的主要是底层特征而不是高层的语义特征。

- 无监督学习可以保持更好的空间位置关系。有监督的预训练模型会损失空间信息。

- 提出了更好的监督学习方法

Self-supervised learning via GAN

- BigBiGAN 2019 (imagenet top1 55.6%)

- Image GPT 2020 (imagenet top1 69.0%)

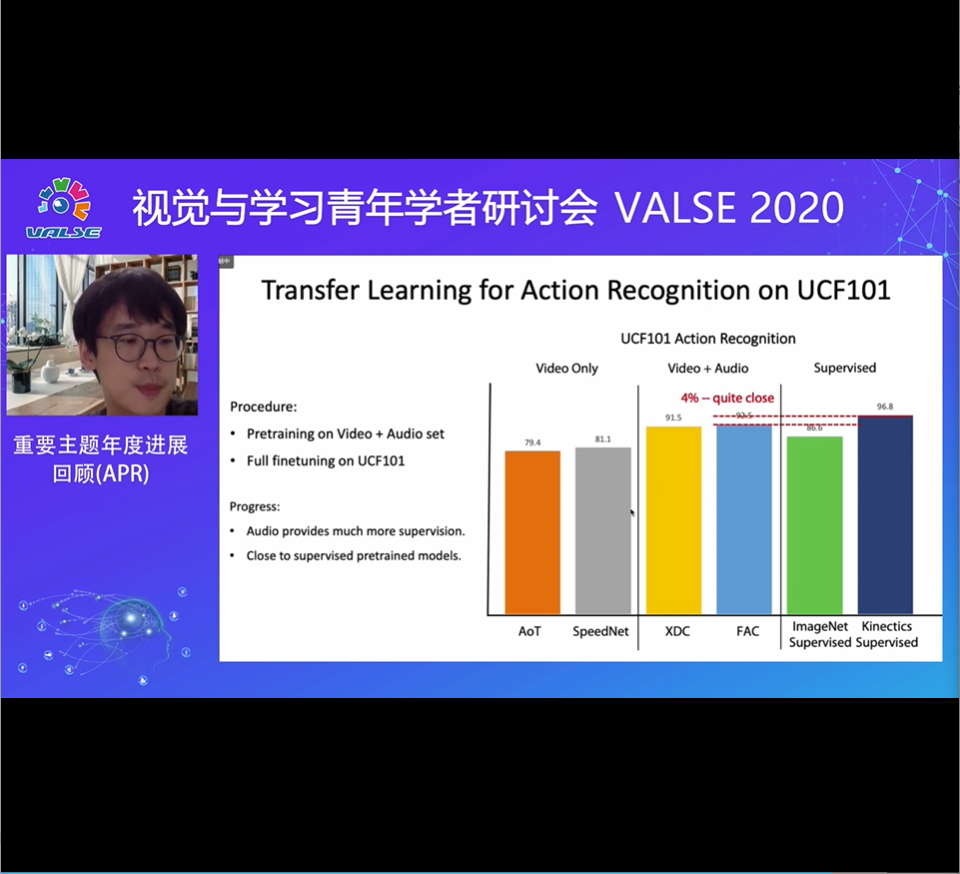

Self-supervised learning on Videos

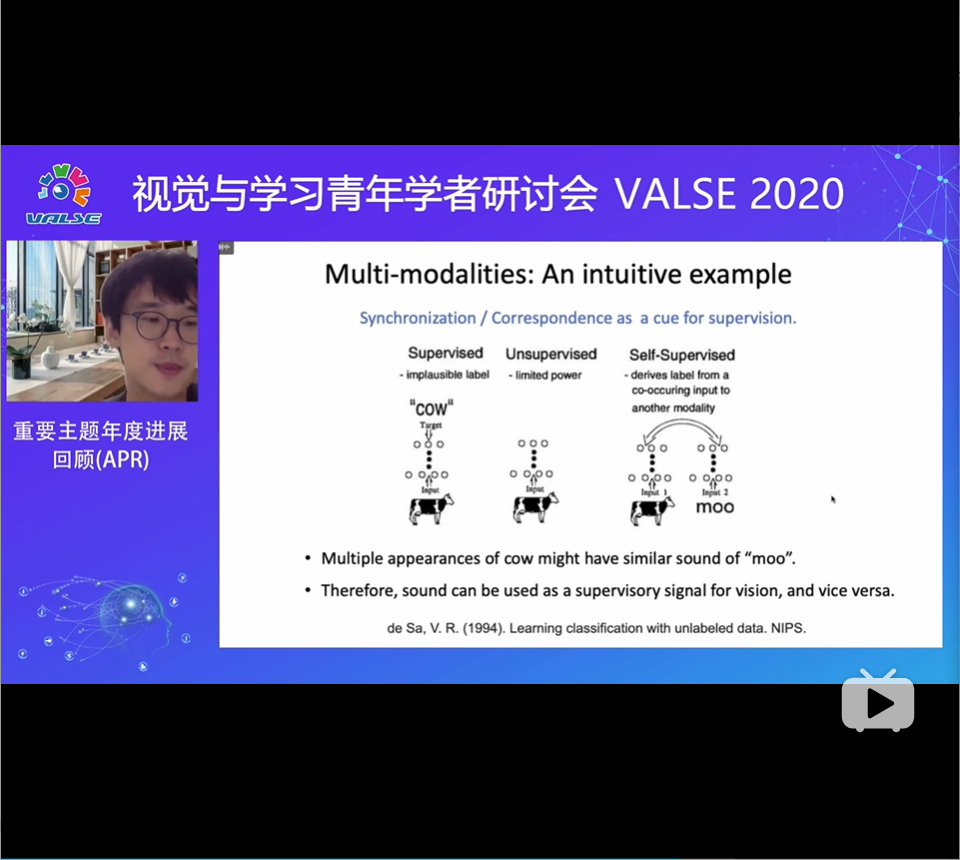

Self-supervised learning on multi-modalities

采样牛的图片和声音,不同的牛虽然RGB图片不同但是叫声是相似的,所以可以通过音频信息辅助视觉信息的学习。

时序上不同的audio和RGB都是负标签。

Future Directions

- designing better pretrained models for downstream tasks (as opposed to focusing on ImageNet classification)

- multi-modality self-supervised pretraining and applications

- smarter ways to automatically collect useful data/labels